Technology is Like a Pot Roast…

By Jeff Finkelstein

Since the Broadband Library Fall issue coincides with SCTE Cable-Tec Expo, I wanted to address a question foremost in many cable operators’ minds. That question, of course, is how to make a good pot roast.

There are few comfort foods that remind us of our halcyon days of yore like pot roast. I remember as a child the smells of a cooking pot roast permeating the house on Sunday and looking forward to an exquisite meal at dinner time. My mother had a preternatural ability to slice the meat so paper-thin it would melt in your mouth. If you drop me an email, I’ll even share with you the recipe handed down from my Bubbe to my mother and then to me. I’ll save you the years of angst growing up in a Jewish family in Philadelphia.

Why pot roast?

Why indeed? There is no magic to pot roast. You basically roast it low and slow in a Dutch oven such that the fat renders a wonderful taste throughout the meat, throw in a few root vegetables for flavoring, and voila! Instant childhood memories.

And yet, there is something magical about it. The smells are so tempting that you want to peer into the pot and maybe sample it, but as a cook you realize that you need to leave it be and not disturb the flavors rendering inside that magical device.

In many ways technology is similar. We spend years considering what consumers will demand in the future in technology, capacity, speeds, and support. Significant hours are spent in discussions internally and externally, with operators, silicon vendors, OEM partners, CableLabs, and countless others. We prognosticate like Punxsutawney Phil on Groundhog Day, often with similar results. (And in my most humble opinion, good old Phil is probably as accurate as any professional weather forecaster.)

And yet we still try to rush technologies into deployment and set dates for when we want to begin deploying the new gadgets. All the while we want new products to cost the same or less than current technologies.

But like a good pot roast, you need to let things simmer a long time for them to taste their best.

Which leads me to one of the most important rules in my “Jeff’s Rules of Technology” list…

Rule #3: Amateurs guess, then apologize afterwards

Or in the case of Punxsutawney Phil, “Groundhogs guess, never apologize, and still get fed like Groundhog kings.” Guessing is fine for an adorable Marmota monax (aka woodchuck or groundhog), but for the rest of us, who are responsible for the financial and technological well-being of our respective companies, not to mention for making sure our deployed technologies will support future customer demands, the expectations are significantly higher.

Perfection is imperfection

There is a fine line between a model built on enough data to provide reasonable guidance towards a future point and one that tries to be perfect. At one time or another we have all been stuck in the infamous “analysis paralysis” mode, where we continue to analyze innumerable permutations of a given dataset trying to determine where things will land at a future point in time. In a never-ending search for perfection in our planning, we too often are unwilling or unable to accept that we will never obtain a “perfect” model or prediction.

To quote Vince Lombardi, “Perfection is not attainable, but if we chase perfection we can catch excellence.” Predicting future trends is not about making binary decisions. The human judgment we add to our models guarantees they will be imperfect, but it also makes them more likely to be accurate than a more data-rich model might be.

All too often we want to use interpolation to determine future direction, but by definition interpolation determines a value between two known values. When setting a course where the goal is fuzzy and uncertain, we need to turn to extrapolation, which estimates or infers a value beyond the range of the available data.
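To make the distinction concrete, here is a minimal Python sketch. Both functions use the same straight line through two known points; the only difference is that `interpolate` refuses to answer outside the known range, while `extrapolate` deliberately extends the line past it. The function names and sample points are hypothetical, chosen only for illustration.

```python
def line_through(x0, y0, x1, y1):
    """Return a function for the straight line through (x0, y0) and (x1, y1)."""
    slope = (y1 - y0) / (x1 - x0)
    return lambda x: y0 + slope * (x - x0)


def interpolate(x0, y0, x1, y1, x):
    """Estimate y at x, but only between the two known points."""
    if not min(x0, x1) <= x <= max(x0, x1):
        raise ValueError("interpolation only covers values between the known points")
    return line_through(x0, y0, x1, y1)(x)


def extrapolate(x0, y0, x1, y1, x):
    """Estimate y at x anywhere on the line, including beyond the known points."""
    return line_through(x0, y0, x1, y1)(x)


# Hypothetical known points: (0, 10) and (10, 30).
print(interpolate(0, 10, 10, 30, 5))   # 20.0, inside the known range
print(extrapolate(0, 10, 10, 30, 20))  # 50.0, a projection past the data
```

The extrapolated value is only as good as the assumption that the trend continues, which is exactly where experience and judgment come in.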

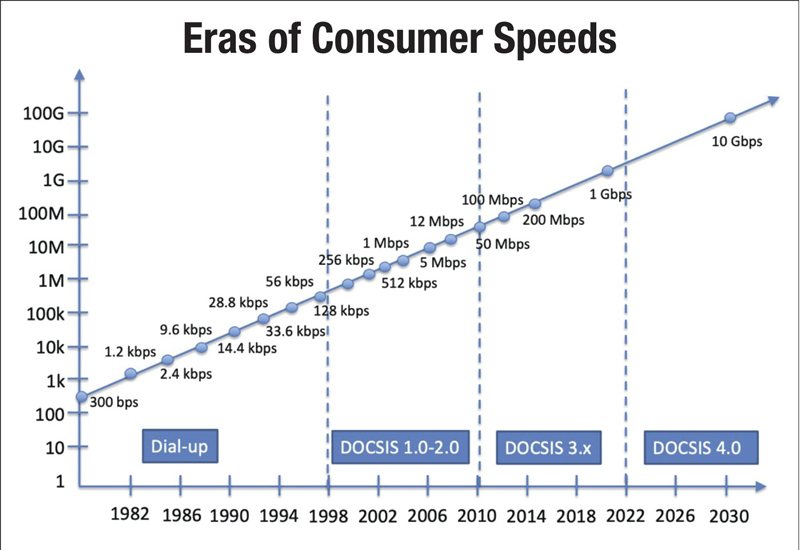

Here is a real-world example. Back in 2002 when I was lead engineer for our DOCSIS effort, I put together a model and slide to show how consumer speeds would progress over the next 30 years. It was based on an early version of the infamous “Cloonan” model, which many of us now use as a baseline for predicting capacity needs.

The final output of that 2002 model is shown below.

It is not particularly pretty, nor does it reflect especially deep thought, but it has proven eerily accurate in the roughly 20 years since it was put together. Plenty of corporate antibodies have disputed its accuracy because it lacked a bazillion data points, a team of analysts and consultants, and hundreds of pretty slides to talk through. It was just a simple graph: 20 years of data connected by a straight line and pushed out another 30 years.
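A straight line pushed out 30 years can be sketched as a least-squares fit extended into the future. This minimal Python version assumes, as is common for speed-growth charts, that the line is straight on a logarithmic axis, which corresponds to a constant annual growth rate. The sample data is synthetic and hypothetical (0.001 Mbps in 1982 growing 50% per year, the rate usually cited as Nielsen’s Law); it is not the data behind the 2002 model, and the helper names are illustrative.

```python
import math


def fit_log_linear(years, speeds_mbps):
    """Least-squares fit of log(speed) = a + b * year; returns (a, b)."""
    n = len(years)
    logs = [math.log(s) for s in speeds_mbps]
    mean_x = sum(years) / n
    mean_y = sum(logs) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, logs))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


def project(a, b, year):
    """Speed (Mbps) on the fitted line for any year, past or future."""
    return math.exp(a + b * year)


def annual_growth_rate(b):
    """A slope of b on the log scale means speeds multiply by e**b each year."""
    return math.exp(b) - 1


# Synthetic, hypothetical history: 20 years of 50%-per-year growth.
years = list(range(1982, 2003))
speeds = [0.001 * 1.5 ** (y - 1982) for y in years]

a, b = fit_log_linear(years, speeds)
print(f"fitted growth: {annual_growth_rate(b):.1%} per year")
for year in (2012, 2022, 2032):  # push the line out another 30 years
    print(year, f"{project(a, b, year):,.1f} Mbps")
```

Working in log space is the design choice that matters here: a line that looks straight on a log chart is steady percentage growth, which is why a modest set of points can carry a projection decades out, and also why small errors in the slope compound quickly.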

Has it been accurate so far? Seemingly so. Will it continue to be accurate? You be the judge.

Unreasonable customers?

As we make our way out of the madness of the past 18 months and analyze the data collected as we struggled to meet customer demand, here is another rule.

Jeff’s Rule #22: Unreasonable customers with unreasonable demands get us to do unreasonable things in an unreasonable time

The lessons learned during the pandemic have been discussed at length and will continue to be, including in some great presentations at this year’s SCTE Cable-Tec Expo. They are important lessons, and we should apply them to future planning.

Some of my personal takeaways from the recent past can be summed up in a few of my rules:

Rule #17: We have no control over how customers use their bandwidth

We now know that a typical customer averages approximately 3.5 Mbps downstream and 350 kbps upstream during the peak period, and that peak usage during busy hours reaches around 35 Mbps downstream and 3.5 Mbps upstream. While we do have some insight into what that bandwidth is used for during those times (primarily OTT video, gaming, and video chats), it varies greatly by customer.
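The roughly 3.5 Mbps busy-hour figure is the kind of per-subscriber average that feeds the Cloonan model mentioned earlier. A commonly cited form of that model sizes a service group as C = Nsub × Tavg + K × Tmax_max, where Nsub is the number of subscribers sharing the group, Tavg the busy-hour average per subscriber, Tmax_max the highest service tier offered, and K a quality-of-experience multiplier. The Python sketch below is a minimal illustration of that formula only; the 200-subscriber group, the 1 Gbps and 100 Mbps tiers, and K = 1.2 are hypothetical values, not actual planning parameters.

```python
def cloonan_capacity_mbps(n_subs, t_avg_mbps, t_max_mbps, k):
    """Service-group capacity: C = Nsub * Tavg + K * Tmax_max.

    n_subs      -- subscribers sharing the service group
    t_avg_mbps  -- busy-hour average usage per subscriber
    t_max_mbps  -- highest service tier offered in the group
    k           -- QoE multiplier (assumed value; larger means more headroom)
    """
    return n_subs * t_avg_mbps + k * t_max_mbps


# Per-subscriber averages from the text; group size, tiers, and K are hypothetical.
N_SUBS = 200
K = 1.2
downstream = cloonan_capacity_mbps(N_SUBS, 3.5, 1000, K)
upstream = cloonan_capacity_mbps(N_SUBS, 0.35, 100, K)
print(f"downstream ~{downstream:,.0f} Mbps, upstream ~{upstream:,.0f} Mbps")
```

The two terms are added rather than multiplied: the group must carry everyone’s average load while still leaving room for a single subscriber to burst to the top tier.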

Rule #38: There is an established order to things, but sometimes it can get mixed up

Just when we think we understand things, it gets all jumbled up. Fortunately, there is enough consistency at the aggregate level that it does not impact the directionality of the plan of record, but we need to be prepared for those special unplanned events.

And finally…

Rule #31: So far, no amount of software has created a single photon

There is a tremendous amount of truly pioneering work being done in virtualization. However, it was the hardware-based solutions, OSP passives and actives, and our ability to tweak every spare bit/second/Hz out of them that got us through the capacity and speed needs of the pandemic. The NCTA reports (https://www.ncta.com/COVIDdashboard) continue to show just how well we built out the cable networks and how resilient they were to consumer demand.

To wrap it up, we need to take the lessons learned and apply them to forward planning to continue meeting and exceeding our customers’ expectations.

Finally, I hope to see you at SCTE Cable-Tec Expo! And don’t forget the pot roast recipe.

Jeff Finkelstein,

Chief Access Scientist,

Cox Communications

Jeff.Finkelstein@cox.com

Jeff Finkelstein is the Chief Access Scientist for Cox Communications in Atlanta, Georgia. He has been a key contributor to engineering at Cox since 2002 and is an innovator of advanced technologies including proactive network maintenance, active queue management, flexible MAC architecture, DOCSIS 3.1, and DOCSIS 4.0. His current responsibilities include defining the future cable network vision and teaching innovation at Cox. Jeff has over 50 patents issued or pending. He is also a long-time member of the SCTE Chattahoochee Chapter.
