The Transformation of the Internet

By Jeff Finkelstein

The Internet has become part of the daily lives of millions and millions of users. In fact, most of us would have a difficult time getting through the day without it. We use it to communicate, do research, play games, watch sports, movies and home-made videos, manage our finances, babysit our kids and pets, shop, order food delivery, and much more.

Interestingly, other than email, none of the ways we use the Internet today were envisioned when its original developers conceived of an international network for communicating with one another. At the time, they were interested in creating an electronic version of the post office. What it has become is a way for us to interact with one another on many different levels.

Predictably, the current architecture of the Internet raises concerns: services that require low latency or high bandwidth cannot be guaranteed end-to-end. Current and future applications need a deterministic, consistent network connection between endpoints to deliver the experiences that both residential and business customers want.

For example:

- Virtual reality requires 25 Mbps or greater throughput while maintaining a motion-to-photon latency of less than 20 ms

- Industrial applications, e.g., machine-to-machine or sensor-to-vehicle communication, require between 25 µs and 10 ms latency

- Tactile (haptic) applications require approximately 1 ms latency

- Vehicular networks require between 500 µs and 5 ms latency
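To make these budgets concrete, the sketch below encodes the figures from the list and checks a measured path against them. It is a minimal illustration under stated assumptions: the `Requirement` type and `assess` function are hypothetical names, every bound is treated as inclusive, a quoted range is read as "the strictest application in the class needs the low end, the most relaxed needs the high end", and the VR motion-to-photon figure is treated as if it were entirely a network budget, which overstates what the network actually gets (rendering and display also consume part of those 20 ms).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Requirement:
    name: str
    strictest_latency_us: float  # low end of the quoted range (tightest application)
    loosest_latency_us: float    # high end of the quoted range (most relaxed application)
    min_throughput_mbps: Optional[float] = None


# Figures taken from the list above; ranges are expressed in microseconds.
REQUIREMENTS = [
    # Assumption: the whole 20 ms motion-to-photon budget is treated as network latency.
    Requirement("Virtual reality", 20_000, 20_000, 25),
    Requirement("Industrial (M2M, sensors)", 25, 10_000),
    Requirement("Tactile (haptic)", 1_000, 1_000),
    Requirement("Vehicular networks", 500, 5_000),
]


def assess(latency_us: float, throughput_mbps: float) -> dict[str, str]:
    """Classify a measured path against each application class (bounds inclusive)."""
    results = {}
    for req in REQUIREMENTS:
        if req.min_throughput_mbps is not None and throughput_mbps < req.min_throughput_mbps:
            results[req.name] = "insufficient throughput"
        elif latency_us <= req.strictest_latency_us:
            results[req.name] = "meets full range"
        elif latency_us <= req.loosest_latency_us:
            results[req.name] = "meets part of range"
        else:
            results[req.name] = "fails"
    return results


if __name__ == "__main__":
    # A hypothetical 2 ms, 100 Mbps path.
    for name, verdict in assess(latency_us=2_000, throughput_mbps=100).items():
        print(f"{name}: {verdict}")
```

With a hypothetical 2 ms, 100 Mbps path, VR is satisfied, industrial and vehicular applications are only partly served, and haptic applications fail, which illustrates how quickly "fast enough" becomes application-specific.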

The Internet as we know it today offers no guarantee of packet delivery, let alone the deterministic latency required by bleeding-edge applications. While we can control the transit of packets on our own service provider networks, the Internet is still the Wild West when it comes to preserving packet markings as packets traverse its many web-like paths.

So then, what does the Internet need to look like for future applications? Let’s start with the applications that futurists predict will be important. New media has large bandwidth requirements, pushing throughput from Mbps to Gbps and on to Tbps. Many business services, and some residential services, need high-precision timing support, deterministic delivery and best-guaranteed services. The networks themselves need to span not only the planet but also space, while being federated and trustworthy.

New media, e.g., holograms and light field displays, could use up to 19.1 gigapixels, which requires approximately 1 Tbps of throughput. A hologram the size of an average human (77 x 20 inches) requires around 4.6 Tbps.

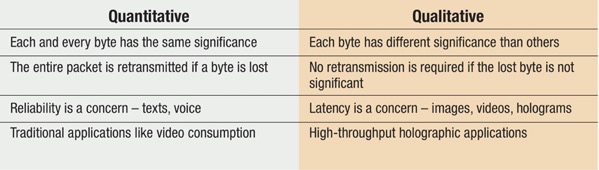

When it comes to video, we are seeing a shift from quantitative to qualitative digital information. Comparatively…

As we review the network performance requirements of the next Internet, a leitmotif emerges: latency and jitter.

So why do latency and jitter matter? Simply put, time is money and money is time. For example, a one-second slowdown per web page could cost Amazon $1.6B in sales a year. On Wall Street, a one-millisecond delay could cost $100M per year. In virtual and augmented reality, a latency of 20 ms or more results in dizziness. These examples do not begin to address the requirements of future technologies like remote surgery, cloud-based PLCs (programmable logic controllers) or intelligent transportation systems. Part of our challenge is to better understand what future applications will require and how we can manage those requirements at the network layer.

We need a new way to handle the requirements of time-sensitive current and future applications. Today’s networks are designed for best-effort traffic and depend on statistical multiplexing to scale network interfaces. The dilemma is that statistical multiplexing is not sufficient to meet the needs of these applications: it performs well on average, but it cannot guarantee when any individual packet will arrive. We need a new way of handling packet delivery in absolute time. We need new user-network interfaces (UNI), resource reservation signaling (RSVP), a new forwarding paradigm, intrinsic self-monitoring and self-correcting OAM, business agreements between interconnecting providers, and a business model for monetizing the network end-to-end that fairly distributes payments for traffic transit.
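Why statistical multiplexing falls short can be seen with a small queueing simulation. The sketch below models a single FIFO output port using Lindley’s recursion, assuming Poisson packet arrivals and fixed-size packets (an M/D/1 queue); the function names, the 10 Gb/s link and the 1500-byte packet size (1.2 µs of transmission time) are illustrative assumptions, not a model of any particular network. At a single fast hop the absolute delays are only microseconds; what matters is the shape: the mean stays small while the tail grows sharply with load, and nothing in the mechanism bounds the worst case.

```python
import random
import statistics


def fifo_delays(utilization: float, n_packets: int = 100_000,
                service_us: float = 1.2, seed: int = 1) -> list[float]:
    """Per-packet queueing delay (µs) at one FIFO port, via Lindley's recursion.

    Assumes Poisson arrivals (exponential gaps) and fixed-size packets, i.e. an
    M/D/1 queue. service_us=1.2 is a 1500-byte packet on a 10 Gb/s link.
    """
    rng = random.Random(seed)
    mean_gap_us = service_us / utilization  # arrival rate = utilization / service time
    wait = 0.0  # the first packet finds an empty queue
    delays = []
    for _ in range(n_packets):
        delays.append(wait)
        gap = rng.expovariate(1.0 / mean_gap_us)
        # Lindley: the next packet waits for whatever work remains when it arrives.
        wait = max(0.0, wait + service_us - gap)
    return delays


def percentile(values: list[float], p: float) -> float:
    """Simple nearest-rank percentile, p in [0, 1]."""
    ordered = sorted(values)
    return ordered[min(len(ordered) - 1, int(p * len(ordered)))]


if __name__ == "__main__":
    for rho in (0.5, 0.8, 0.95):
        d = fifo_delays(rho)
        print(f"load {rho:.2f}: mean {statistics.mean(d):6.2f} us, "
              f"p99.9 {percentile(d, 0.999):7.2f} us, max {max(d):7.2f} us")
```

As a sanity check, the textbook M/D/1 mean wait is ρS / (2(1 − ρ)), about 0.6 µs at 50% load and 11.4 µs at 95% load with S = 1.2 µs. Across many hops, slower access links and burstier real traffic, the same tail behavior compounds, which is why averages alone cannot underwrite a millisecond-scale guarantee.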

New Communications for a New Internet

We have many protocols and packet markings available, including MPLS and DSCP, that can help minimize jitter and latency, but there are few technologies that can determine the end-to-end flow of an application’s packets across multiple providers and report back to support performance measurement and a financial model for payment. We need a way to model packets transiting multiple networks in order to better understand each network’s impact on application-level packet determinism.
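One simple way to reason about packets crossing several providers is to treat each provider domain as a segment with a committed best-case and worst-case delay, then compose them along the path. The sketch below does exactly that; it assumes each domain can actually commit to a hard delay bound (which today’s Internet does not provide), uses plain additive composition as the simplest and most conservative choice, and relies on hypothetical names (`Segment`, `check_budget`) and made-up per-domain numbers. The 5 ms latency budget echoes the upper end of the vehicular range above; the 1 ms jitter budget is an illustrative assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    provider: str        # hypothetical provider/domain label
    min_delay_us: float  # best-case one-way delay through this domain
    max_delay_us: float  # worst-case delay the domain is assumed to commit to


def end_to_end(path: list[Segment]) -> tuple[float, float]:
    """Return (worst-case latency, worst-case jitter) in µs, composing bounds additively."""
    worst = sum(s.max_delay_us for s in path)
    best = sum(s.min_delay_us for s in path)
    return worst, worst - best


def check_budget(path: list[Segment], latency_budget_us: float,
                 jitter_budget_us: float) -> dict:
    worst, jitter = end_to_end(path)
    # The domain with the largest worst-case delay is the first candidate to renegotiate.
    bottleneck = max(path, key=lambda s: s.max_delay_us).provider
    return {
        "worst_case_us": worst,
        "jitter_us": jitter,
        "meets_latency": worst <= latency_budget_us,
        "meets_jitter": jitter <= jitter_budget_us,
        "bottleneck": bottleneck,
    }


# Hypothetical three-domain path with illustrative numbers.
path = [
    Segment("access-provider", 150, 400),
    Segment("transit-provider", 300, 2_500),
    Segment("destination-provider", 100, 300),
]

if __name__ == "__main__":
    print(check_budget(path, latency_budget_us=5_000, jitter_budget_us=1_000))
```

In this example the path meets a 5 ms latency budget (3,200 µs worst case) but misses the jitter budget (2,650 µs), and the report points at the transit domain. A per-domain report of this kind is also the sort of input a settlement model for paying for deterministic transit would need.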

Today’s switches likely cannot handle the latency and jitter requirements of tomorrow’s applications. We need new silicon for switches that can understand the new protocols and have the performance necessary for new requirements. Today’s routers may have the ability to differentiate services to the level required, but without a better understanding of what those requirements are, it is difficult to determine if changes will be needed.

We have not even begun to discuss the possibility of a future network paradigm including NTNs (non-terrestrial networks), i.e., networks that extend into space.

Conclusion

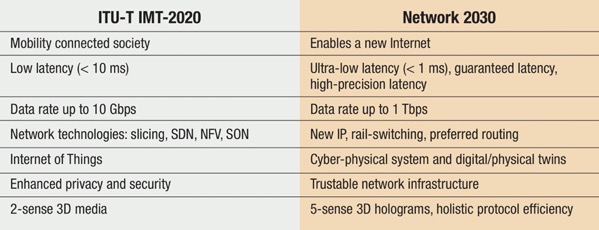

The table above summarizes today’s network versus the future state of the new Internet.

There is much work to be done if we want to get ahead of the future requirements of the Internet. A number of working groups are beginning discussions on these topics and more. Now is the time to get involved and have input into the definition and creation of the future Internet.

Jeff Finkelstein

Executive Director of Advanced Technology

Cox Communications

Jeff.Finkelstein@cox.com

Jeff Finkelstein is the Executive Director of Advanced Technology at Cox Communications in Atlanta, Georgia. He has been a key contributor to the engineering organization at Cox since 2002, and led the team responsible for the deployment of DOCSIS® technologies, from DOCSIS 1.0 to DOCSIS 3.0. He was the initial innovator of advanced technologies including Proactive Network Maintenance, Active Queue Management and DOCSIS 3.1. His current responsibilities include defining the future cable network vision and teaching innovation at Cox. Jeff has over 43 patents issued or pending. His hobbies include Irish Traditional Music and stand-up comedy.