E = MC² (Energy = Milk * Coffee²)

By Jeff Finkelstein

In my last article for Broadband Library Fall 2017, I wrote about art and innovation. As part of the article I talked about how art imitates life. I’ve been thinking about that after a recent trip visiting operators in Europe and getting time to visit many great art museums. I spent a long time in front of one specific painting. After looking at it for quite a while, I realized that I was wrong and Oscar Wilde had it right in his 1889 essay “The Decay of Lying” when he said, “Life imitates art far more than art imitates life.”

His belief was that there are many things in life and nature that we do not see until art shows them to us. I thought about this while standing in front of the Van Gogh painting called “Sunflowers” at the Van Gogh Museum in Amsterdam.

Now, I’ve seen many sunflowers, have even grown them a few times. What I failed to notice is the simplistic beauty of the flowers. The Van Gogh painting shows sunflowers in all stages of life, from full bloom to withering. It is an optimistic statement on life from an incredible artist who brings out the very things we miss when we look at the flowers.

If it takes art to show us what was in front of us all this time, which is the imitator?

The Not-So Golden Rules

I started collecting my “rules” around 1981 and at this point have 24 or so of them. For this and subsequent articles, I am going to focus on a few each time.

Not all have proven to be accurate; in fact, a few are downright wrong. Some were gathered from other sources (see the Fall 2017 Broadband Library issue for a cool quote from Albert Einstein), but most are statements I’ve come up with while trying to explain things or learn how to provide architectural guidance. We are all very busy of late, and I have found that short, direct statements have the most impact.

Continuing to riff on my “rules” leads me to one I first said in 1986, when the company I was writing UNIX kernel code for decided that desktops with UNIX would never replace monolithic proprietary architectures. The company sold mini and super-mini computers, small in comparison to mainframes, but significantly larger and costlier than the desktop computers the competition had created.

My company misjudged its customers, who wanted simpler distributed computing rather than larger, more costly minis and super-minis. Sales dropped off and the company eventually went the way of all companies that don’t listen to their customers.

The rule that came out of that experience, simply stated, is:

“Simple, modular architectures always win in the end.”

Mainframes to super-minis, super-minis to minis, minis to desktop, desktop to phone, phone to wearables.

While at the time it was written to address computers, you can look at the changes underway in the cable industry today and see its applicability. We have had periods of incredible growth in customer data consumption that our vendors have solved for us with technologies that scaled for many years.

But even with those scalable monolithic architectures, we still are up against the growth curve as we start climbing the asymptote of the CAGR (compound annual growth rate) curve at 49%. We cannot fit all the equipment needed over the next 10+ years to meet the growth into our existing facilities. Even if we could solve for the space problem, there is not enough power or cooling to support the increase in equipment without a significant expenditure.
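To put the 49% figure in perspective, here is a minimal sketch of how compound growth accumulates. The function name and the chosen horizons are illustrative assumptions; only the 49% CAGR comes from the text above.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """Return how many times larger demand is after `years` of compounding.

    `cagr` is the compound annual growth rate as a fraction (0.49 for 49%).
    """
    return (1.0 + cagr) ** years


if __name__ == "__main__":
    # 49% is the CAGR quoted in the article; the horizons are illustrative.
    for years in (1, 5, 10):
        print(f"{years:>2} years: {growth_multiple(0.49, years):.1f}x")
```

At 49% per year, demand grows by roughly 54 times over a decade, which is why space, power and cooling become the limit long before the growth stops.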

You Can Break the Law, Just Not the Laws of Physics

While we may make equipment denser, two laws we cannot conquer (yet) are Koomey’s and Dennard’s. Koomey’s law states that the number of computations per joule of energy dissipated has been doubling every 1.57 years. This means that the amount of energy needed to provide a fixed computing load falls by roughly a factor of 100 every 10 years.
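The arithmetic behind Koomey’s law can be sketched in a few lines. The function names are my own for illustration; the only input taken from the text is the 1.57-year doubling period.

```python
def efficiency_gain(years: float, doubling_period: float = 1.57) -> float:
    """Factor by which computations per joule improve over `years`,
    assuming efficiency doubles every `doubling_period` years."""
    return 2.0 ** (years / doubling_period)


def energy_for_fixed_load(initial_joules: float, years: float,
                          doubling_period: float = 1.57) -> float:
    """Energy needed for the same computing load after `years`."""
    return initial_joules / efficiency_gain(years, doubling_period)


if __name__ == "__main__":
    print(f"10-year efficiency gain: {efficiency_gain(10):.0f}x")
    print(f"Energy for a 100 J load after 10 years: "
          f"{energy_for_fixed_load(100.0, 10):.2f} J")
```

With an exact 1.57-year doubling period the 10-year gain works out to about 83 times, the same order of magnitude as the commonly quoted factor of 100.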

Dennard’s law states that as transistors get smaller, their power density stays constant, such that power use stays in proportion with surface area. Both voltage and current scale down with length. Dennard’s law is starting to break because at smaller sizes current leakage is a challenge as chips heat up, which creates thermal runaway and increased energy costs. This breakdown forces silicon manufacturers to move to multi-core processors to improve performance, which causes other issues, primarily increased power consumption and CPU power dissipation. The result is that only some fraction of the integrated circuit can be active at a given point in time without violating power constraints. The unused portion of the chip is called dark silicon.

This creates significant challenges in scaling our monolithic architectures to meet future growth. But by distributing functions to remote devices north, south, east and west, we can meet the needs of this growth.
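A toy model makes the scaling argument concrete. Everything here is an illustrative assumption rather than a device model: the `Transistor` class, the arbitrary units, and especially the fixed leakage term, which is a deliberately crude stand-in for the leakage problem described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transistor:
    length: float   # feature length, arbitrary units
    voltage: float  # arbitrary units
    current: float  # arbitrary units

    @property
    def area(self) -> float:
        return self.length ** 2

    @property
    def power(self) -> float:
        return self.voltage * self.current

    @property
    def power_density(self) -> float:
        return self.power / self.area


def dennard_scale(t: Transistor, k: float) -> Transistor:
    """Ideal scaling: length, voltage and current all shrink by 1/k."""
    return Transistor(t.length / k, t.voltage / k, t.current / k)


def scale_with_leakage(t: Transistor, k: float, leakage: float) -> Transistor:
    """Hypothetical non-ideal case: a leakage current that does not shrink
    is added on top of the ideally scaled current."""
    return Transistor(t.length / k, t.voltage / k, t.current / k + leakage)


if __name__ == "__main__":
    base = Transistor(length=1.0, voltage=1.0, current=1.0)
    print("base density:   ", base.power_density)
    print("ideal, k=2:     ", dennard_scale(base, 2).power_density)
    print("with leakage:   ", scale_with_leakage(base, 2, 0.1).power_density)
```

In the ideal case power and area both fall by 1/k², so power density is unchanged. Once any part of the current stops scaling, density rises with every shrink, which is the heat problem that leads to dark silicon.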

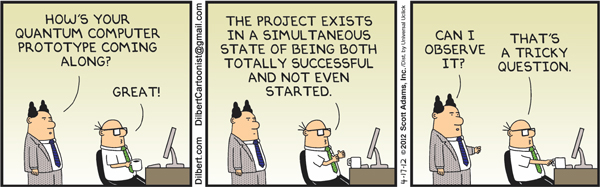

At least until quantum computing is available…

We Can’t Possibly Continue this Growth. Can We?

There has been significant work recently by CableLabs on humanizing the growth curve. For as long as I have been tracking consumer consumption of bandwidth, there have been those who said that growth would not continue at the CAGR of the day. Back in 1992, when I had a 56 kbps line installed at my house for a start-up I was working for at the time, I asked the IT director what the plan was to move up to T-1. His comment was “What do you plan on doing? Running an ISP out of your house? You will never need more than 56 kbps!”

Of course, look at us today offering 1 Gbps downstream with more planned for future growth…

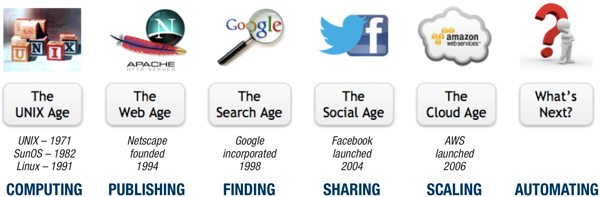

I attended a presentation a few years ago by Dr. Marcus Weldon of Nokia that discussed where cable was heading. One particularly brilliant slide is shown above.

We struggle to explain where bandwidth goes and why growth continues unbounded. I think Dr. Weldon did an excellent job showing the ages of the Internet, which puts a context around the growth and helps us understand where the bandwidth is being used. If that consumption continues as expected, then we need equipment to match that growth. We have been scaling current technologies for a long time, but we can see how they are struggling to keep up.

Which Direction?

The first major step towards modularity in DOCSIS networks came in the form of M-CMTS. The modular CMTS decomposed the PHY from the MAC by using edge-QAM modulators to provide the physical layer. It also introduced the concept of DEPI (downstream external PHY interface) and tunnels, which were used to manage the data plane from MAC to PHY. As a result, we could now scale the PHY separately from the MAC, which allowed us to grow beyond what the monolithic architectures of the day could provide in a single chassis. In 2006 I was responsible for managing the deployment of M-CMTS at Cox and, thanks to an extremely hard-working team, got it done nationwide. It turned out to be quite a good decision as we have managed to scale it to support the services of today.

Fast forward from 2006 to 2009. Not resting on our laurels as an industry, we began discussions on how to further decompose functions from the CMTS into smaller constituent parts. The DAA (distributed access architecture) working group was formed under CableLabs supervision to think through and model the potential directions. The output from this WG led to an R-PHY spec, R-MACPHY technical report, and the basis for R-CMTS.

The purpose of this article is not to get into the discussion around which DAA is best. Each one has its own strengths and weaknesses, and in the end we each make our own choices. What I am attempting to point out is that cable has a long history of solving future technology challenges from within. From analog to digital, single QAM to multi-QAM, MCNS to DOCSIS, sub/mid/high split, remote architectures, and more, we continue to move the needle on the technology curve to provide the services most important to our customers.

What we have proven through logical and pragmatic steps is that cable not only has a long life, it has a long useful life. Our ability as an industry to transform cable technologies is simply amazing; I would even say scathingly brilliant.

Simple Is As Simple Does

By moving to simple, modular architectures we can now follow the rule:

“Simplicity at the edge, complexity in the core.”

This rule came to life when we were working on the X Window System at MIT in the mid-1980s. For those too young to remember, X11 was an architecture-independent system for remote graphical user interfaces and input device capabilities. Each person using a networked terminal could interact with the display with any type of user input device. X used a client-server architecture where the server was the display device and the client was the application requesting the rendering of graphics on the user device. A bit backwards from how we think about client-server today, but the point is the same: logical decomposition of functions, taking advantage of the most efficient technologies to provide each function.

I remember back in high school science class using a centrifuge to separate liquids of varying densities or solids from liquids. As the centrifugal forces work in the horizontal plane and the test tubes are at an angle, the larger particles move a short distance and hit the walls of the test tube, then slide to the bottom. Washing machines work with a similar technique to help wring the water out of clothes. The tilt-a-whirl ride in amusement parks does the same thing to our bodies, often with a dizzying result.

Our natural tendency is to push as much technology out as far as we possibly can to keep interconnected components together. Why go through the complexities of separating network layers when we have them so neatly packaged together? We can just keep making things smaller to avoid having to deal with breaking them apart.

Unfortunately, the immutable laws of Koomey and Dennard (see the earlier section on “You can break the law, just not the laws of physics”) kick in, which eventually force us to make a different choice. The simplest choice is to decompose network elements, placing them at the logical points in the network for their most effective and efficient placement. Decomposing the network elements is time-consuming and complex, but as we learned in the early 1990s with DCE (distributed computing environment) and DFS (distributed file system), there is an intrinsic elegance to distributing workloads throughout the network. It’s hard to do and even harder to do it right, but the result is worth the journey for scale, reliability and resiliency.

With distributed CMTS functions we may apply the same logic. While we could place the entire CMTS in the outside plant, the simplest components, relatively speaking, are the modulators and demodulators at the physical layer, and perhaps a small portion of the MAC such as the upstream mapper.

As the need for compute intensity increases, those pesky Koomey and Dennard laws kick in. Because it takes a lot of energy to power the computations needed at the upper network layers, coming up with temperature-hardened compute and network engines is complex and costly. No service provider wants to put air-conditioned huts in the field, but we recognize that at times we must do just that, as equipment needed to provide essential services does not always lend itself to miniaturization.

The simpler the equipment we put in the field, the easier it is to keep cool. The cooler the equipment, the less energy used. The less energy used, the less heat generated. The less heat generated, the longer the time between temperature-induced failures. By keeping the computationally significant equipment in our larger temperature- and energy-controlled facilities, we in turn reduce overall energy consumption at a grand scale by optimizing the equipment used to provide those services.

Keep it simple in the outside plant to maximize every watt of energy consumed.

Simple, no?

Jeff Finkelstein


Executive Director of Advanced Technology

Cox Communications

Jeff Finkelstein is the Executive Director of Advanced Technology at Cox Communications in Atlanta, Georgia. He has been a key contributor to the engineering organization at Cox since 2002, and led the team responsible for the deployment of DOCSIS® technologies, from DOCSIS 1.0 to DOCSIS 3.0. He was the initial innovator of advanced technologies including Proactive Network Maintenance, Active Queue Management and DOCSIS 3.1. His current responsibilities include defining the future cable network vision and teaching innovation at Cox.

Jeff has over 43 patents issued or pending. His hobbies include Irish Traditional Music and stand-up comedy.

Vincent van Gogh, Oil on Canvas. Arles, France: August, 1888.

Image provided by author, Public Domain

Chart provided by author

DILBERT © 2012 Scott Adams. Used By permission of ANDREWS MCMEEL SYNDICATION. All rights reserved.