AI for Ad Ingest

By Srilal Weera, Ph.D.

Some enjoy TV ads and some don’t, but most are unaware of the amount of oversight involved in delivering these ads. Federal agencies such as the FCC, FDA, FTC and FEC have a say in what can be shown (or rather what cannot be shown) to the public. In addition to the regulatory purview, contractual obligations with content owners also dictate which ads may be displayed. For example, if an alcohol ad is accidentally displayed during a Little League sports program, that can lead to heavy fines as well as bad PR for the content distributor (also known as the service provider or network operator).

To avoid such mishaps, trained teams manually examine tens of thousands of ads a month and quarantine the failed ones. The challenge for AI is how to automate that process with a machine learning engine embedded within the ad ingest workflow.

Multichannel video programming distributors (MVPDs) are service providers that deliver video content to consumers. In the US it is a highly regulated industry. The term covers not only traditional cable companies, but any entity that provides TV service to consumers via fiber, coax, satellite, DSL or wireless. With the advent of Internet-based TV services, the term has been extended to V-MVPD (virtual MVPD). The content distributors are still responsible for the displayed video content, including advertisements. This places the onus on the distributor to prevent non-compliant content from reaching the TV audience. Restricted categories include ads featuring alcohol, firearms, gambling, drugs and violence, as well as trademarks, copyrighted content, explicit content, political content, etc. Identifying these categories may pose a challenge to a machine learning tool, as off-the-shelf products are more oriented towards facial recognition.

In common usage, machine learning tools for video products perform a multi-pass analysis, with each pass identifying specific characteristics such as faces, common objects, celebrities, etc. The results are presented as content descriptor metadata (labels). An accompanying “confidence level” indicates how certain the tool is of each prediction. Customizing off-the-shelf products for carrier-class video applications requires a certain amount of post-processing, or else the results could be tainted with false positives (the tool reports a signature that is not actually present) and false negatives (the tool misses a true signature).
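The trade-off between false positives and false negatives can be illustrated with a minimal sketch. The labels, confidence values, and the `truly_present` ground-truth field below are all made up for illustration and do not come from any particular product; the point is only that a single confidence threshold cannot remove both kinds of error at once.

```python
# Hypothetical detector output. "truly_present" is ground truth added
# purely for illustration; a real tool would not supply it.
detections = [
    {"label": "beer bottle", "confidence": 0.92, "truly_present": True},
    {"label": "handgun", "confidence": 0.55, "truly_present": False},
    {"label": "cocktail", "confidence": 0.25, "truly_present": True},
]


def flag(detections, threshold):
    """Return the labels whose confidence meets or exceeds the threshold."""
    return [d["label"] for d in detections if d["confidence"] >= threshold]


def errors(detections, threshold):
    """Return (false_positives, false_negatives) at the given threshold."""
    fp = sum(
        1 for d in detections
        if d["confidence"] >= threshold and not d["truly_present"]
    )
    fn = sum(
        1 for d in detections
        if d["confidence"] < threshold and d["truly_present"]
    )
    return fp, fn


for t in (0.2, 0.5, 0.8):
    print(t, flag(detections, t), errors(detections, t))
```

With this toy data, a low threshold lets the spurious handgun label through, while a high threshold drops the genuine but low-confidence cocktail label. This is the gap that post-processing is meant to close.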

Resurgence of contextual advertising

In the last two decades, cookie-based user tracking was the primary mode for targeted advertising. The latest privacy regulations, however, are making such data collection practices unacceptable. Advertisers are thus eager to find alternative means to promote their brands. Contextual advertising is deemed a suitable option as it does not infringe on personal data. However, that necessitates powerful AI capabilities for extracting contextual data from a scene.

Algorithms for video classification

Object detection and recognition are classification problems in machine learning. Compared to image analysis, video analysis is inherently more complex: image analysis has only spatial dependence, whereas video analysis also involves a temporal component. The statistical algorithms generally used in the analysis are based on decision trees, support vector machines (SVM), and k-nearest neighbors (KNN). The current trend, however, is towards neural network-based algorithms. This is mainly due to two drivers: the availability of large amounts of training data and of high-speed GPUs for parallel computing.

Among the deep learning algorithms, the convolutional neural network (CNN) is the workhorse for image classification. Note that a video is composed of a succession of images, and the added time dimension makes the analysis more complex. Recurrent neural networks (RNN) have been successfully applied to such time series analysis. However, training a machine learning model based on an RNN is susceptible to “vanishing/exploding gradient” problems. (These terms refer to the gradient, dy/dx, shrinking towards 0 or growing without bound, much as tan θ is 0 at 0° and tends to ∞ as θ approaches 90°.) In a neural network with a large number of hidden layers the problem is compounded and may lead to training errors. While there are improvements (e.g., long short-term memory, or LSTM), the point being made here is that the algorithmic approach alone may be insufficient or too costly.
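A small numerical sketch shows why depth compounds the problem. During backpropagation the gradient reaching an early layer (or early time step) is a product of one factor per layer. Here each factor is reduced to a single scalar, which is a simplifying assumption; in a real network the factors are matrices and vary from step to step.

```python
def backprop_factor(per_step_factor, steps):
    """Multiply `steps` identical per-step gradient factors together."""
    result = 1.0
    for _ in range(steps):
        result *= per_step_factor
    return result


# Factors slightly below 1 shrink the gradient towards zero (vanishing);
# factors slightly above 1 blow it up (exploding).
for factor in (0.9, 1.0, 1.1):
    print(f"factor={factor}: gradient scale after 100 steps = "
          f"{backprop_factor(factor, 100):.3e}")
```

Even a per-step factor as mild as 0.9 or 1.1 changes the gradient scale by several orders of magnitude over 100 steps, so the early layers either stop learning or receive unstable updates.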

Another recent challenge for the traditional black-box model of AI is model interpretability. A neural network may easily classify a photo of an animal as a cat or dog, but is silent about why it made that decision. Explainable AI (or the related term, interpretable AI) is a fertile research area that delves into the AI decision-making conundrum. Prof. Aleksander Madry of MIT explained this issue at a recent presentation to the SCTE AI/ML working group.

Updating millions of parameters (weights and biases) associated with hidden states in a neural network takes time. In the present example, searching each video frame for a multitude of restricted categories is time consuming. Much of that search may also be irrelevant. (In the case of a beer ad, searching for all manner of firearms or drug paraphernalia would not be productive.)

One way to refine the results is to add a software engine to the workflow as described below.

Heuristics based solution

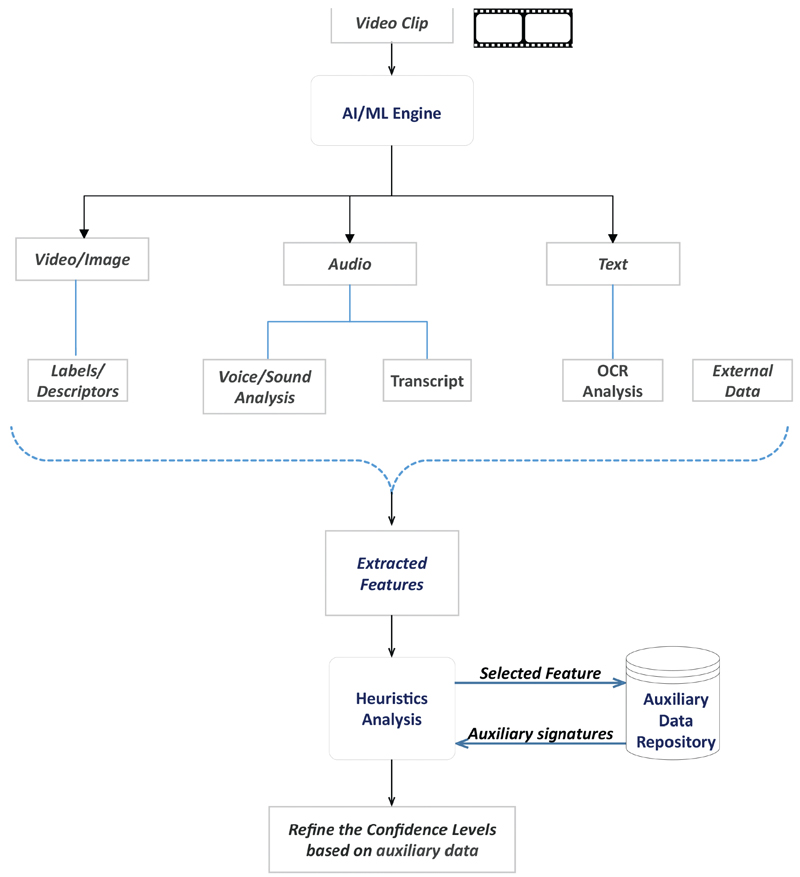

Machine learning products generally treat the metadata of each stream separately; e.g., video/image analysis is separate from audio or text analysis, although each may use neural network-based classification algorithms. Interrelating the video, audio and textual data could therefore enhance the accuracy of predictions.

Figure 1 illustrates a workflow with an embedded decision module to accommodate heuristic analysis. ‘Heuristic’ in the present context would mean an educated guess based on supplementary data. It is not a rigorous deterministic algorithm, but yields results in a reasonable time.

Figure 1. Improving machine learning analysis with heuristics

In this case the machine learning engine parses the video clip and performs a multi-stream analysis. The output is a JSON file with content descriptors and an associated confidence level for each detection. The next stage is the heuristic analysis. For each of the features identified, the other streams are scanned for auxiliary signatures by comparing them against a pre-defined data repository. Based on the findings, the confidence levels are then refined.

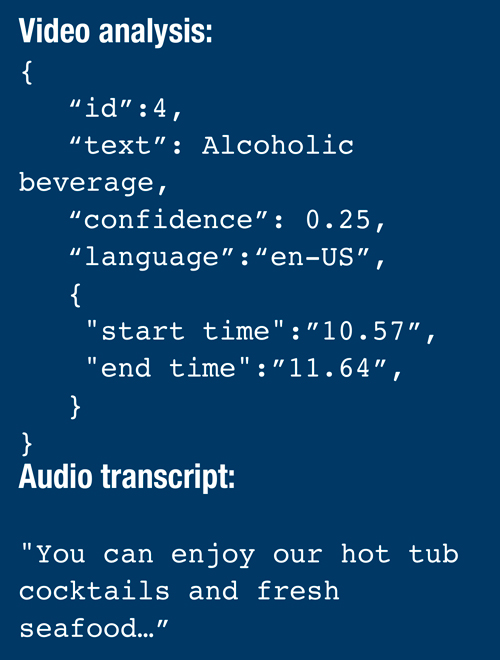

As an example, consider Figure 2, which is a screenshot from a resort ad video clip. The task is to determine if the ad is related to alcohol. As the JSON output indicates, the confidence level for the alcoholic beverage detection was only 25%, perhaps because the drink looks similar to iced tea. However, an auxiliary signature (the word “cocktails”) is detected in the audio stream, which raises the confidence level to over 60%. Using such tell-tale signs, the impact of false positives and false negatives is mitigated.

Figure 2. Alcoholic beverage detection
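The refinement step in the resort-ad example can be sketched as follows. This is not the article's actual implementation: the JSON field names, the `AUX_SIGNATURES` repository, the `AUX_WEIGHT` value, and the "noisy-OR" update rule are all assumptions chosen for illustration. They happen to lift a 25% detection above 60% when one auxiliary keyword is found in the audio transcript.

```python
import json
import re

# Hypothetical repository of auxiliary signatures per restricted category.
AUX_SIGNATURES = {
    "alcoholic beverage": {"cocktails", "beer", "wine", "bartender"},
    "firearm": {"rifle", "ammunition", "handgun"},
}

# Assumed strength of a single auxiliary match (not from the article).
AUX_WEIGHT = 0.5


def refine(ml_output_json, aux_weight=AUX_WEIGHT):
    """Raise each label's confidence for every auxiliary keyword heard."""
    data = json.loads(ml_output_json)
    # Tokenize the transcript into lowercase words, ignoring punctuation.
    words = set(re.findall(r"[a-z']+", data["audio_transcript"].lower()))
    refined = []
    for det in data["video_labels"]:
        hits = sorted(AUX_SIGNATURES.get(det["label"], set()) & words)
        conf = det["confidence"]
        for _ in hits:
            # Noisy-OR combination: each hit removes a share of the doubt.
            conf = 1.0 - (1.0 - conf) * (1.0 - aux_weight)
        refined.append({
            "label": det["label"],
            "confidence": round(conf, 4),
            "auxiliary_hits": hits,
        })
    return refined


# Illustrative input loosely modeled on the resort ad; the values are made up.
sample = json.dumps({
    "video_labels": [{"label": "alcoholic beverage", "confidence": 0.25}],
    "audio_transcript": "Relax by the pool with our signature cocktails.",
})
print(refine(sample))
```

A multiplicative rule of this kind keeps the refined value below 1 and leaves a detection unchanged when no auxiliary signature is found. A production system would presumably tune the weights per category and per stream.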

Although the present discussion is on digital advertising, the approach applies to video analysis in general. Artificial neural networks loosely mimic the functioning of biological neurons. Extending the analogy a step further: when the human brain receives a plausible signature from one of the streams (visual, aural, olfactory, gustatory or haptic/tactile), its normal behavior is to seek supplementary evidence, i.e., auxiliary data from other streams, to validate its initial detection.

Figure 3. JSON output from image and audio analysis

The solution outlined above posits a similar functionality adapted to multi-stream video analysis.

Srilal Weera, Ph.D.

Charter Communications

Srilal chairs the AI/ML working group of SCTE. He is a principal engineer in the Advanced Engineering group at Charter and previously worked at AT&T Labs. Srilal has many patent filings as an inventor and is also USPTO certified (patent bar). He received a BSEE degree from University of Sri Lanka and MS and PhD degrees from Clemson University. His background is in computational data modeling and voice/video networks and services. He was trained at Oak Ridge National Lab and NASA Goddard Center. Srilal is an IEEE Senior Member and has served as an ABET accreditation evaluator for EE degree programs.