Video Interoperability with IP Video

By Stuart Kurkowski and Joel Derrico

As we look to the future of content delivery, many requirements are driving the system design for Internet-delivered video (Internet Protocol, or IP video). These requirements are not new when we think of digital multichannel video programming distributors (dMVPDs), Television Everywhere (TVE), and over-the-top (OTT) services, but some emerging requirements are prompting a re-examination of workflows and a different look at IP video delivery, with a focus on video interoperability. If we use this opportunity to build the right infrastructure, we can leap a whole generation forward with end-to-end IP video delivery. In this article we discuss this end-to-end system at a high level, identifying technologies and standards that can get us to this exciting new architecture for video interoperability.

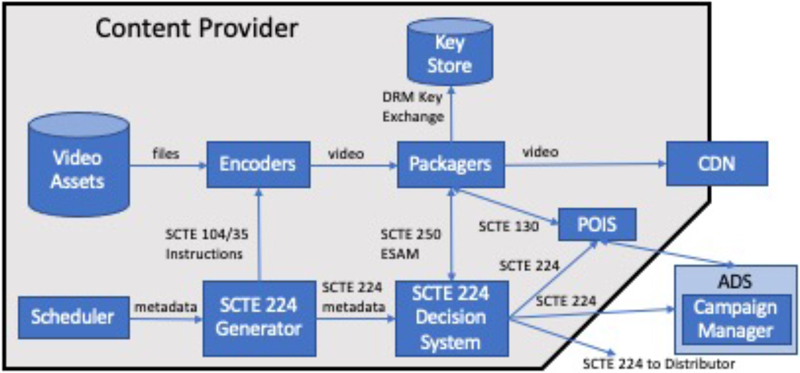

Content providers (CPs) already have content in digital formats, but the traditional method of sending that content via satellite is changing rapidly because of FCC C-band reallocation and the growing quantity of 4K (UHD) and 8K content. CPs are looking at new ways to deliver content via the Internet. Figure 1 outlines the major steps, components, and technologies used in this proposed process of getting high-quality video and audio from CPs to the CDN.

Figure 1. Content provider flow

The CP portion of the workflow has the first key to producing interoperable video. Adaptive bitrate (ABR) encoding and transmission to their content delivery network (CDN) are processes the CPs already support. Because this video will ultimately be served through various distributor workflows to a wide variety of playback devices, each with its own screen resolution, available network, processing power, and capacity, the content must be encoded in multiple forms before arriving at the CDN. As part of the encoding and packaging process, the linear channel scheduler generates channel metadata, including traditional content metadata (SCTE 236), program identifiers, start and end times, and material identifiers. These drive automation through the Automation System to Compression System Communication API (SCTE 104 messages) at the beginning of the workflow, transitioning to in-band digital program insertion cueing messages (SCTE 35 markers) as the various ABR streams are produced. The additional metadata flows parallel to the video as SCTE 224 Event Signaling and Notification Interface (ESNI) objects and passes to the automation systems using the Real-time Event Signaling and Management API (SCTE 250). All of these protocol standards work together so that the out-of-band messaging can carry the playout rights instructions, such as blackouts or regionalized content, rewind and fast-forward restrictions, and dynamic advertising instructions, and the in-band markers can link those instructions to the video at the frame-accurate time they are needed.
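The pairing of in-band markers with out-of-band instructions described above can be illustrated with a minimal sketch. It does not use the actual SCTE 35 or SCTE 224 schemas: the `InBandMarker` and `OutOfBandPolicy` classes, their fields, and the `resolve_action` function are hypothetical stand-ins, and the only idea taken from the workflow is that a shared event identifier lets a downstream component look up the rights policy at the time the marker appears in the stream.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class InBandMarker:
    """Hypothetical stand-in for an in-band cue: an event ID and a stream time."""
    event_id: str
    pts_seconds: float


@dataclass(frozen=True)
class OutOfBandPolicy:
    """Hypothetical stand-in for out-of-band rights metadata for one event."""
    event_id: str
    blackout_regions: frozenset
    alternate_content: Optional[str] = None
    allow_fast_forward: bool = True


def resolve_action(marker, policies, viewer_region):
    """Join a marker to its policy and return the action to apply at marker time.

    `policies` maps event IDs to OutOfBandPolicy objects.
    """
    policy = policies.get(marker.event_id)
    if policy is None:
        # No out-of-band instruction for this event: play as delivered.
        return {"at": marker.pts_seconds, "action": "play",
                "allow_fast_forward": True}
    if viewer_region in policy.blackout_regions:
        # The viewer is in a restricted region: switch to alternate content.
        return {"at": marker.pts_seconds, "action": "blackout",
                "alternate_content": policy.alternate_content,
                "allow_fast_forward": policy.allow_fast_forward}
    # The policy exists but does not restrict this region.
    return {"at": marker.pts_seconds, "action": "play",
            "allow_fast_forward": policy.allow_fast_forward}
```

In this sketch the policy can be updated at any time without touching the video, because the marker carries only the identifier and the timing.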

The CDN also provides a logical home for cloud digital video recording (cDVR) capability. This would include short-term generic copies of video files for start-over, catch-up, and pause functionality, as well as long-term end-user-specific copies of content for future playout. Since the SCTE 35 markers are included in the content copies, the retained SCTE 224 metadata continues to provide playout rights and enables additional capabilities such as dynamic ad insertion (DAI) for aging recorded advertisements.

In addition to the creation of the multiple ABR streams by the encoders and packagers, the packagers also secure the video content by encrypting it. This means that, in order to play the video on the distributors’ (e.g., dMVPD) portion of the workflow, the distributors’ digital rights management (DRM) system would have to decrypt and re-encrypt the content before serving it to the client. Potentially, the multiple DRM processes could be eliminated by cooperative CP/dMVPD key management and single encryption.

With the video encoded, packaged, and encrypted, the CP then provides the numerous video formats to its CDN. With the content prepared in an interoperable way and secured on the CP’s “edge,” the workflow transitions to the distributor side, where that content is connected with the distributor’s subscribers so they can watch the video.

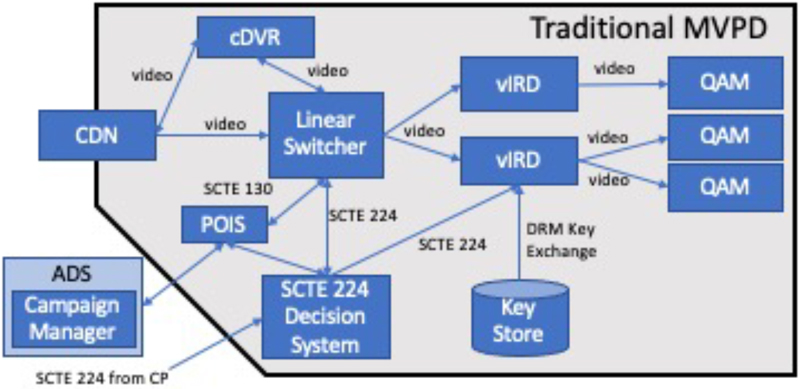

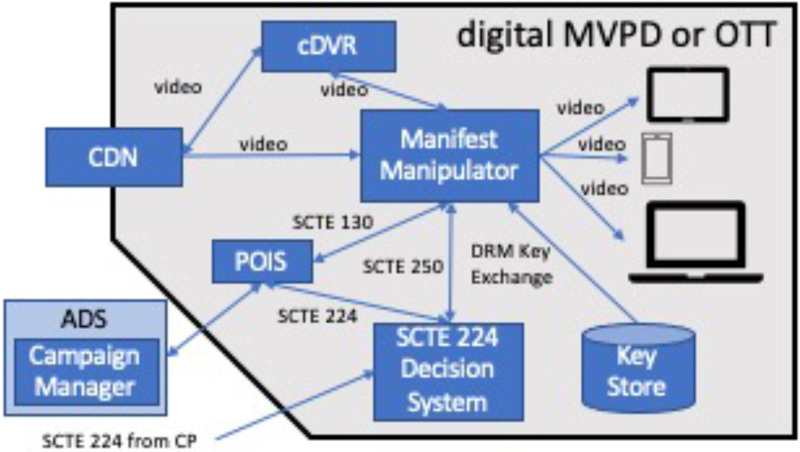

Depending on the distributor, the connection to the CDN and consumption of the video can diverge significantly (Figures 2 and 3). Since the CP has provided numerous versions of the video, interoperability is ensured and no transcoding of the video is required, so all the distributor has to do is retrieve the video segments from the CP’s CDN. If the distributor has a CDN already established in their environment, then they can just deep link their own CDN with the CP’s CDN and pull the format of encoded video for their playout stack. Additionally, the distributor can establish gateways to pull the appropriate format of the encoded video into their own workflow to make it available to their playout system.

Figure 2. Traditional MVPD flow

Figure 3. Digital MVPD or OTT flow

With the content at the distributor’s front doorstep, the distributor can take those individual ABR streams and pass them to their playout systems via manifest manipulators that choose the correctly formatted video for the players based on the players’ capacity, bandwidth, screen size, and processing power. If the distributor is a traditional cable MVPD, they will use linear stream stitchers or other processing devices to convert the streams to multicast for distribution over traditional quadrature amplitude modulation (QAM) distribution plants as single program transport streams (SPTS) to traditional set-top boxes.
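As a rough illustration of how a manifest manipulator might match a stream to a player, the sketch below picks one rendition from an ABR ladder using only bandwidth and screen height. The `Rendition` class, the `choose_rendition` function, the 0.8 headroom factor, and any bitrates passed in are assumptions made for this example; real systems weigh more factors, such as codec support and processing power.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    """One entry in a hypothetical ABR ladder."""
    name: str
    height: int
    bitrate_kbps: int


def choose_rendition(ladder, bandwidth_kbps, screen_height, headroom=0.8):
    """Return the highest-bitrate rendition that fits the player.

    A rendition fits when its bitrate is within `headroom` of the measured
    bandwidth and its height does not exceed the screen height. If nothing
    fits, fall back to the lowest-bitrate rendition so playback can start.
    """
    budget = bandwidth_kbps * headroom
    candidates = [
        r for r in ladder
        if r.bitrate_kbps <= budget and r.height <= screen_height
    ]
    if not candidates:
        return min(ladder, key=lambda r: r.bitrate_kbps)
    return max(candidates, key=lambda r: r.bitrate_kbps)
```

Because the CP has already encoded every rung of the ladder, this step only selects among existing streams and never transcodes.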

The last piece of the workflow is the insertion of advertising into the linear streams, known as digital program insertion (DPI). Advertisements can be inserted in many places along the workflow, depending on how dynamic the CPs want the advertising to be. As shown in each figure, the decision about which advertisement to place in the stream is made by an advertising decision service (ADS). The CPs and distributors alike use ADSs to manage inventory, for creative sales, and to establish campaigns. However, none of that matters if the advertisements are not placed in the video and seen by a consumer. To start with, the CPs themselves can place advertisements in the video streams during their encoding and packaging process. This can be accomplished by the automation systems calling the ADS during encoding/packaging. This allows the stream to carry advertisements that play out as “baked in” advertisements. These play in the video if no dynamic advertising is enabled or if the advertising breaks are designated as breaks exclusively for the CP. For advertising segments that are designated for the local or distributor availability, the identified advertisements are replaced by the distributor. The distributor handles the splicing of these new advertisements by substituting their advertisement content via the manifest manipulator for unicast users or prior to the conversion to multicast for distribution using traditional QAM delivery.
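The substitution rule described above can be sketched as a simple operation on an ordered segment list. This is a simplified model, not a real manifest format: the `substitute_ads` function, the break dictionaries with `id`, `start`, `count`, and `owner` keys, and the segment names are all hypothetical. It assumes replacement advertisements occupy the same number of segments as the break they replace; otherwise the baked-in advertisement is kept.

```python
def substitute_ads(segments, breaks, replacement_ads):
    """Return a new segment list with distributor-owned breaks replaced.

    segments:        ordered list of segment URIs, baked-in ads included
    breaks:          list of dicts with keys "id", "start", "count", "owner"
    replacement_ads: dict mapping a break ID to a list of replacement URIs
    """
    result = list(segments)  # never modify the caller's list
    for brk in breaks:
        if brk["owner"] != "distributor":
            # Break reserved for the CP: the baked-in ad stays.
            continue
        ads = replacement_ads.get(brk["id"])
        if ads is None or len(ads) != brk["count"]:
            # No usable replacement: the baked-in ad plays as a fallback.
            continue
        start = brk["start"]
        result[start:start + brk["count"]] = ads
    return result
```

For example, with one CP-exclusive break and one distributor break, only the second is rewritten:

```python
segments = ["p1", "p2", "cp_ad1", "cp_ad2", "p3", "local_ad1", "local_ad2", "p4"]
breaks = [
    {"id": "b1", "start": 2, "count": 2, "owner": "cp"},
    {"id": "b2", "start": 5, "count": 2, "owner": "distributor"},
]
print(substitute_ads(segments, breaks, {"b2": ["dist_ad1", "dist_ad2"]}))
```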

As a result of this end-to-end workflow, video interoperability is ensured and there is no need for transcoding. Audio and video quality therefore remain high, and the CP can be assured of the playout quality because they encoded the content and placed it in their CDN. This workflow is also not constrained by satellite delivery changes or capacity limits as new formats like 8K and UHD become more popular with consumers. Additionally, out-of-band rights management allows a single contribution channel to be regionalized and blacked out at the packager, linear stream stitcher, or the manifest manipulator, eliminating the need for multiple feeds via satellite transponders and integrated receiver/decoders (IRDs) spread throughout the country or the world.

Although this model is ready for the leap toward video interoperability, there are areas ripe for exploration and advancement. These areas are outside the scope of this overview, but they include single encryption, key exchange, the proliferation of personalized manifests for unicast playout in OTT, and frame accuracy for segment splicing in a single-encode environment. Even with room for improvement, the technologies discussed above are evolving rapidly. The standards identified in this architecture are also evolving, and the SCTE Digital Video Subcommittee, especially the Digital Program Insertion Working Group, continues to develop and extend the standards referenced here, allowing CPs, distributors, and equipment manufacturers to work together to see this through.

This architecture provides true video interoperability, increased video quality and scalability for the future.

Craig Cuttner, Yasser Syed, and Dean Stonebeck contributed to this publication.

Stuart Kurkowski,

Comcast Technology Solutions

Stuart Kurkowski is a Distinguished Engineer at Comcast Technology Solutions, serving as an architect for live/linear service offerings and the CTS Linear Rights Manager, a market-leading SCTE 224 engine. He is a contributor to SCTE’s 130, 224, and 250 standards.

Joel Derrico,

Cox Communications

Joel Derrico is a Principal Engineer at Cox Communications. He is a recognized leader in the areas of video compression, processing, and distribution and a contributor to SCTE’s 35, 130, and 224 standards. Prior to joining Cox, Joel worked at Scientific-Atlanta, where he created several industry-defining products.

Image: Shutterstock