The GAP

By Ed Dylag

No, I am not writing about a clothing store or the Tube in London, but rather about an SCTE specification called the Generic Access Platform — GAP.

The GAP specification will standardize the node housings found in a cable operator’s outside plant, which primarily hang from strands strung between poles or sit in street-side cabinets. Standardization will streamline deployments in the usual ways, by minimizing training, spare-parts inventory, toolsets and so on. At its most basic level, the standardization process will bring tremendous benefits to operators, who will be deploying more nodes, and more sophisticated nodes, as their networks evolve. But the most exciting aspect of GAP for me is the set of possibilities it creates for hosting new access technologies and applications in the outside plant.

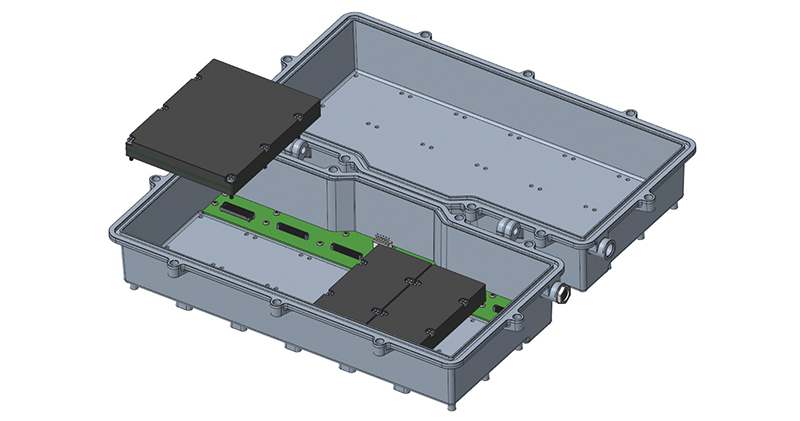

First, the basics. GAP is a specification that will standardize ‘hybrid fiber/coax’ or ‘HFC’ node housings. Notice that I put these traditional cable-network terms in quotes; the reason will become obvious as you read on. The GAP specification will standardize the mechanical, power and thermal parameters of a node housing, so it defines all the basic elements such as overall size and shape, port entry locations and hinge design. Digging a little deeper, the specification also defines the mechanical and electrical characteristics of a number of internal backplanes. This is where things get more interesting.

You can think of each GAP backplane as containing a number of sockets into which any GAP-compliant module can be installed, as long as that module has the right shape and size, the correct mating connector and compatible electrical interfaces. The specification defines all of these parameters. It also defines several backplane options, which gives module designers flexibility.

On the ‘base side’, the side attached to the strand, there are backplanes primarily designed for power and RF distribution, e.g., to plug in power supplies, RF modules or RF trays. I stress the word primarily because the specification does not dictate module functionality. This means the sky is the limit in terms of what your module does, as long as it complies with the mechanical, thermal and electrical specifications for that particular module socket. In fact, several envisioned GAP use cases won’t require RF at all. More on that later.

The mating ‘lid side’ is designed primarily to host access personality modules (there’s that word primarily again). The lid side comes in two distinct backplane variants: low-speed and high-speed. The low-speed variant includes power and telemetry pins and will be most useful for standalone modules that don’t need to communicate with other modules except to relay telemetry and status information. The high-speed variant adds PCI Express and Ethernet for high-speed data communications.
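To make the socket-compatibility idea concrete, here is a minimal Python sketch. It is a hypothetical model, not anything defined by the GAP specification: it treats each lid-side backplane variant as a set of signal groups (power, telemetry, PCI Express and Ethernet, as described above) and says a module fits a socket when the socket provides every signal group the module needs. Mechanical, thermal and pinout details, which the specification does define, are deliberately left out, and the module names are illustrative only.

```python
from enum import Enum, auto


class Signal(Enum):
    """Backplane signal groups named in the article (illustrative only)."""
    POWER = auto()
    TELEMETRY = auto()
    PCIE = auto()
    ETHERNET = auto()


# Hypothetical model of the two lid-side backplane variants. The signal sets
# follow the article's description, not the GAP specification's actual pinout.
LID_BACKPLANES = {
    "low-speed": {Signal.POWER, Signal.TELEMETRY},
    "high-speed": {Signal.POWER, Signal.TELEMETRY, Signal.PCIE, Signal.ETHERNET},
}


def fits(module_needs: set, backplane: str) -> bool:
    """Return True if the backplane provides every signal group the module needs.

    Mechanical and thermal compliance are assumed to be checked separately.
    """
    return module_needs <= LID_BACKPLANES[backplane]


# Hypothetical modules: a standalone module that only needs power and telemetry,
# and a compute motherboard that also needs the high-speed data paths.
standalone_module = {Signal.POWER, Signal.TELEMETRY}
compute_board = {Signal.POWER, Signal.TELEMETRY, Signal.PCIE, Signal.ETHERNET}

print(fits(standalone_module, "low-speed"))  # True
print(fits(compute_board, "low-speed"))      # False
print(fits(compute_board, "high-speed"))     # True
```

Under this simplified model, a module that needs PCI Express or Ethernet can go only into a high-speed socket, while a standalone module fits either variant.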

This high-speed backplane variant is the key reason GAP is not ‘just another node housing’, which is not to minimize the importance of standardizing GAP’s other elements. Let me explain by pivoting to what you can do with GAP.

Cable networks continue to evolve. In the early days, these networks were really just a series of amplifiers, line extenders and taps that carried RF from headends and hub sites into subscribers’ homes over coaxial cable. As operators began extending fiber deeper into their outside plants, they needed a way to convert the optical signals back to RF for the coaxial ‘last mile’. Enter the HFC node, the genesis of GAP. These HFC nodes are designed to handle only RF-modulated signals and perform only ‘Layer 1’ (physical layer) translation.

As cable operators move toward distributed access architectures (DAA), nodes must now handle higher-layer processing and move into the Internet Protocol (IP) domain. Additionally, operators are deploying PON and even wireless access networks to expand their revenue-generating service offerings. These new services of course offer operators great new opportunities, but they also pose great challenges for the legacy HFC node.

Certainly, the modular nature of GAP will provide a great deal of adaptability to operators who will deploy a mix of access types across their networks. As an example, a GAP node can host a remote OLT module to service a new housing development with PON and another GAP node can host a remote PHY device (RPD) to increase bandwidth in an existing neighborhood. Yet another GAP node can host a wireless small cell to provide outdoor CBRS coverage. All of these access types can be served using the same GAP node housing design simply by populating each GAP node housing with the appropriate module type.

Each of these access types can be implemented in what I’ll call a traditional approach where a standalone module implements the entire network stack — similar to what is done today with RPD modules. This standalone module approach is perfectly valid with GAP using the low-speed backplane option.

But now, back to the high-speed backplane I mentioned earlier. As access networks become IP-based, I expect nodes increasingly to be designed, deployed and managed like standard data center elements. It just makes a lot of sense; the parallels between a compute node and a GAP node are unmistakable.

Let me first back up a step and say that I don’t expect the IT shop within cable companies to take over outside plant node management any time soon. I believe node vendors will continue to provide the tools, interfaces and hardened technology that operators have come to rely on for maintaining a high-reliability access network. But under the hood, there will be a migration toward standard off-the-shelf hardware and software components inside GAP nodes.

This migration to standard off-the-shelf hardware and software is already happening in the headend as operators transition to virtual CMTS (vCMTS). We see the trend again in 5G wireless networks with virtual radio access network (vRAN) deployments, and yet again with PON pluggables and virtual broadband network gateways (vBNG).

But why? Underlying all of these trends is the key tenet that everything that can be implemented in software should be implemented in software. Again, why? Because software is cost-effective, has relatively low barriers to entry, and is easier to upgrade and therefore more future-proof. There is also a huge number of reusable building blocks in the form of commercial and open source offerings. Further, you can readily run more than one function (i.e., application) at a time, which creates opportunities for resource sharing and pooling that you just don’t get with purpose-built devices. On to some examples.

With a GAP high-speed backplane, vendors can create a compute motherboard that controls a number of network interface modules, each providing the correct access type for a given deployment. For example, an RPD network interface module coupled with a compute motherboard running the DOCSIS MAC layer equals a remote MAC-PHY device (RMD). Similarly, an Ethernet module with a PON pluggable coupled with a compute motherboard equals a remote OLT device. In the wireless RAN space, the compute motherboard can run baseband processing while a cable modem network interface module provides mid-haul to the non-real-time RAN components upstream in the network.

What gets really interesting is the ability to mix and match, using the motherboard as a shared resource. If you add a PON pluggable to a DOCSIS RMD node, you can serve high-value PON customers out of the same node simply by adding the right software load. Very powerful, indeed!
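The mix-and-match idea can be illustrated with a small Python model. Everything in it is hypothetical: the pairing table simply restates the examples above (RPD plus DOCSIS MAC software, PON pluggable plus OLT software, cable modem plus RAN baseband), and the labels are not GAP module identifiers or any vendor’s software. What it shows is that, under this simplified view, a node’s services are the pairings of installed interface modules with software loads on one shared motherboard, so adding PON to an RMD node means adding one pluggable and one software load rather than a second node.

```python
from dataclasses import dataclass, field

# Hypothetical pairing table restating the article's examples: an access-side
# interface module plus a matching software load on the shared compute
# motherboard yields a service. Labels are illustrative, not GAP identifiers.
SERVICE_FOR = {
    ("RPD", "DOCSIS MAC"): "DOCSIS (remote MAC-PHY device)",
    ("PON pluggable", "OLT"): "PON (remote OLT)",
    ("cable modem", "RAN baseband"): "wireless RAN (cable modem mid-haul)",
}


@dataclass
class GapNode:
    """Illustrative model of one GAP node with a single shared motherboard."""
    modules: list = field(default_factory=list)   # installed interface modules
    software: list = field(default_factory=list)  # loads running on the motherboard

    def services(self) -> list:
        # Every module/software pair with a known pairing becomes a service;
        # all of them share the same motherboard's compute resources.
        return sorted(
            SERVICE_FOR[(m, s)]
            for m in self.modules
            for s in self.software
            if (m, s) in SERVICE_FOR
        )


node = GapNode(modules=["RPD"], software=["DOCSIS MAC"])
print(node.services())  # ['DOCSIS (remote MAC-PHY device)']

# Add PON to the same node: one new pluggable plus one new software load.
node.modules.append("PON pluggable")
node.software.append("OLT")
print(node.services())  # ['DOCSIS (remote MAC-PHY device)', 'PON (remote OLT)']
```

A real node would also have to budget power, thermal headroom and compute capacity across these services; the sketch ignores all of that to keep the shared-resource idea visible.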

And by the way, once there are compute resources in the node, operators will be well positioned to deploy emerging edge compute applications such as AI-based filtering of security video, content delivery network (CDN) caching of popular game downloads and smartphone operating system upgrades, and ultra-low-latency applications.

So, as you can see, the GAP specification will not only streamline deployment through standardization and modularization, but also open up a whole new range of opportunities for operators.

GAP specification work continues, with revision 1.0 expected in early 2021. The GAP working group includes most of the traditional HFC node vendors as well as a number of newcomers interested in pursuing the new opportunities GAP enables. Currently, the group is focused on developing a CAD model to accompany the specification.

There is still opportunity to join the working group. To join, contact standards@scte.org or go to https://scte.org/standards-join.

Ed Dylag,

Market Development Manager,

Intel Network Platforms Group

Ed is currently responsible for driving network functions virtualization (NFV) and media opportunities in the cable and wireless industries. In his 20 years at Intel, Ed has held various roles in product management and segment marketing in IP telephony, routing and switching, and network access. Ed got his start in communications 30 years ago as a software engineer and holds a BS in Electrical Engineering from the State University of New York at Buffalo.