The Cable Network, Immersive Experiences and Lightfields

By Andrew Ip and Dhananjay Lal

Since the start of the cable industry in the late 1940s, the cable network has been delivering the immersive experiences of the day. From broadcast TV to video-on-demand to broadband-enabled experiences like over-the-top (OTT) video and multiplayer gaming, our networks have always delivered the services our customers desire.

While we don’t know what will come next or what some entrepreneur is cooking up in their garage, we do know that our networks will continue to be prepared for the future.

With fiber-backed networks, a DOCSIS roadmap toward 10G capability, and only incremental investment required to commercialize multi-gigabit, low-latency services, the cable industry is ideally positioned to keep delivering the immersive products and services of the future.

Future immersive experiences

What’s the definition of immersion? “Achieving the illusion of presence, the overwhelming sensation that you are actually there and interacting…”

Almost every major sci-fi film has imagined a world where technology can be used to augment reality with experiences that enhance how we digitally live, work, and play.

Many technologies promise to deliver true immersion. Platforms such as virtual reality (VR), augmented reality (AR), mixed reality (MR), lightfields and holographic displays are at different points along the technology “S-curve,” as companies continue to invest significant resources to optimize design, cost and scale.

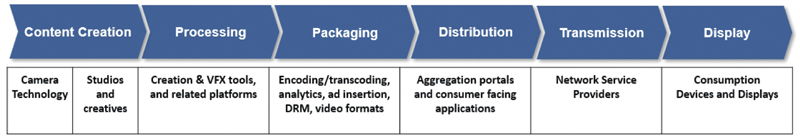

The technology ecosystem, while still nascent, is advancing to the point where this future world may be closer than we think. However, achieving this vision will require collaboration and orchestration across the technology ecosystem, from content creators to networks to end-user devices.

Just imagine being able to experience virtual tourism, collaborate on industrial design with someone across the continent, or sit next to a family member who is in another country.

Lightfields and holograms

Most of us experience the world via light. Without light we wouldn’t be able to see.

As light travels from its sources, it is absorbed, reflected and diffracted by objects before reaching our eyes. The sum total of all these light rays, their intensity and their interactions is a lightfield: a truly accurate representation of 3D space. When captured and displayed, a lightfield enables a new user experience paradigm, in which a viewer can observe a scene from multiple perspectives and perceive effects such as shadows.
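In computer graphics, this idea is usually formalized as the plenoptic function, which gives the radiance of every ray passing through every point in space. The block below follows that textbook formulation rather than anything specific to the demo described later: the full function has seven dimensions, where $(x, y, z)$ is a viewing position, $(\theta, \phi)$ a ray direction, $\lambda$ a wavelength and $\tau$ time. Taking one frame at a time, treating wavelength as RGB color channels, and using the fact that radiance is constant along a ray in free space, it is commonly reduced to a 4D lightfield in which each ray is identified by the points $(u, v)$ and $(s, t)$ where it crosses two parallel planes.

$$
P(x, y, z, \theta, \phi, \lambda, \tau)
\quad\longrightarrow\quad
L(u, v, s, t)
$$

A display that shows different images in different directions is, in effect, presenting a discrete sampling of $L$.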

In this context, a hologram is the result of processing a captured lightfield: a 3D depiction of an object or scene, ready for distribution or display.

Holographic displays hold the promise of making immersive experiences simultaneously available to multiple viewers without needing to wear a headset.

Realizing lightfield streaming requires a superior network

Distributing an interactive lightfield is bandwidth-intensive, latency-sensitive and compute-heavy. Delivering this experience requires a network that can meet all three demands.

- Throughput: A virtual reality session delivered at 4K resolution and 90 frames per second using AVC encoding can easily demand 100 Mbps. Holographic displays will require much more: their 3D media streams may demand many hundreds of Mbps or even multiple Gbps, depending on the content. Advances in codecs are expected to reduce these requirements while maintaining quality.

- Latency: Shared immersive experiences may allow participants to alter their environment, such as the objects in their view or even the storyline. These interactions require low latency, typically a few tens of milliseconds, so that a server at a central location can receive and process each participant's actions and present a consistent holographic view of the 3D world back to all viewers.

- Compute: Immersive experiences require intensive computing, typically provided by stand-alone systems equipped with high-end graphics processing units (GPUs). The cost of such systems remains a barrier to wide-scale adoption. Service providers can address this by embedding GPU compute at the “edge” of the network, for example in cable headend locations.
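The throughput figures above can be related with simple pixel-rate arithmetic. The sketch below is a back-of-the-envelope estimate, not a codec model: it derives an average bits-per-pixel figure from the 4K, 90 fps, 100 Mbps VR example in the text, then applies that same efficiency to a hypothetical multi-view stream of 45 views at 1920×1080 and 30 fps, each view encoded independently. The per-view resolution, frame rate and function names are assumptions chosen for illustration.

```python
def pixel_rate(width: int, height: int, fps: float, views: int = 1) -> float:
    """Pixels per second for `views` views, each width x height at `fps`."""
    return width * height * fps * views


def bits_per_pixel(bitrate_bps: float, px_per_s: float) -> float:
    """Average encoded bits spent per pixel at a given bitrate."""
    return bitrate_bps / px_per_s


def estimate_bitrate_mbps(px_per_s: float, bpp: float) -> float:
    """Bitrate in Mbps if the same bits-per-pixel efficiency is kept."""
    return px_per_s * bpp / 1e6


# Calibrate on the VR figure from the text: 4K at 90 fps with AVC at ~100 Mbps.
vr_bpp = bits_per_pixel(100e6, pixel_rate(3840, 2160, 90))

# Hypothetical multi-view stream: 45 views of 1920x1080 at 30 fps,
# encoded independently (no inter-view prediction) at the same efficiency.
multiview_mbps = estimate_bitrate_mbps(pixel_rate(1920, 1080, 30, views=45), vr_bpp)

print(f"VR calibration: {vr_bpp:.3f} bits/pixel")
print(f"45-view estimate: {multiview_mbps:.0f} Mbps")
```

Under these assumptions the estimate comes to about 375 Mbps, in the "many hundreds of Mbps" range cited above. Bitrate scales linearly with pixel rate at fixed efficiency, so more views, higher per-view resolution or higher frame rates push the figure toward multiple Gbps, while codecs that exploit redundancy between neighboring views would be expected to need less.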

The SCTE demo

Charter Communications, together with industry partners Visby, SCTE, CableLabs, NCTA, CommScope, Looking Glass Factory and Comcast, demonstrated a streaming lightfield at the SCTE Cable-Tec Expo in October of 2020.

The aim of this demonstration was to show how 10G-capable cable networks have the power to accelerate the development of new, lightfield-based technologies and enable new use cases for the home or enterprise.

The “future of work” demo focused on how a user might interact with a hologram during a design review, in this case of a Wi-Fi router: observing the object from multiple angles, then exploding and recombining it for a thorough appraisal.

The “future of home” demo showcased a viewer following a yoga instructor, filmed using lightfield capture, in an interactive, full-motion 3D experience with the ability to pause, rotate and zoom in on the instructor to better observe a pose.

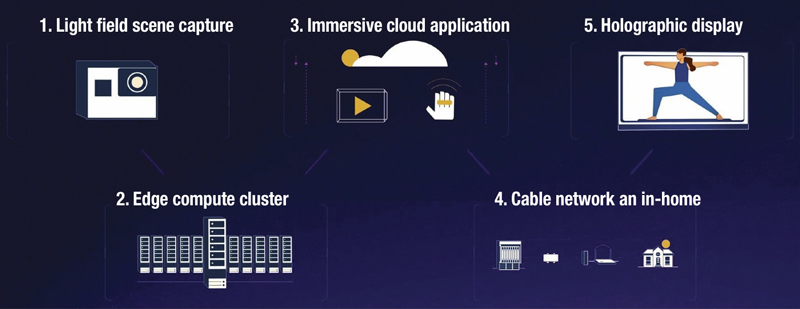

The working end-to-end architecture to deliver these experiences consisted of five key elements.

- Lightfield capture. The photoshoot was one of the first outdoor, “on-location” lightfield captures in natural light. Video feeds from 100 cameras (a verged 5×20 array on a semi-cylindrical rig) were converted into a lightfield by Visby. The Charter Communications team also rendered synthetic ray-traced animations from a 3D model of a Spectrum Wi-Fi router for the “future of work” demo.

- Edge compute cluster. The Charter team developed an edge compute cluster, co-located with the cable modem termination system (CMTS) at the edge of the access network, to deliver a 3D hologram that changed in response to user gestures. The hologram was distributed over the network using High Efficiency Video Coding (HEVC) at a bit rate greater than 500 Mbps.

- Immersive application. Charter Communications developed the immersive application to show how lightfield processing can be used to display the same scene on the holographic display from any one of many camera perspectives. For each camera view, the scene also had to be rendered from every user perspective the display supports; on the Looking Glass 8K display, this is 45 views across a 50-degree horizontal viewing arc. Generating the hologram in real time required significant computation and a large number of high-end GPUs, a costly design decision. Therefore, we pre-rendered the content from a discrete set of camera perspectives and switched from one camera view to another in response to user gestures. We believe this optimization reduces costs while retaining the immersive quality of the experience. Multiple HEVC-encoded files were stored at the edge cluster, each representing the same content from a different camera view. One of these files was streamed to the client over the User Datagram Protocol (UDP) at a high bit rate, typically 500 Mbps to 1 Gbps. Gestures were recognized at the client by interpreting hand poses and sent upstream to the server over a Transmission Control Protocol (TCP) socket. Each gesture was mapped to a camera view, and a valid gesture caused the server to switch files during streaming.

- 10G cable and in-home network. CommScope provided the DOCSIS and in-home communication system, which included a 2 Gbps symmetrical access link between the CMTS and the in-home modem. This link was extended within the home as a multi-gigabit connection using Wi-Fi 6E in the 6 GHz band. A single 160 MHz channel in the U-NII-5 frequency range was deployed, and data rates greater than 4 Gbps at less than 2 milliseconds of latency were achieved over the Wi-Fi connection between the modem and the Looking Glass 8K holographic display.

- Holographic display. At 32 inches, the Looking Glass 8K display is a holographic screen capable of delivering 33 million pixels in volume, with seamless super-stereo transitions that allow everyone in the room to enjoy a 3D experience from their own perspective.
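The view-switching scheme in the immersive application can be modeled in a few lines. The sketch below is a hypothetical illustration, not Charter's implementation: the `ViewSwitcher` class, the gesture names and the segment layout are all assumptions. It assumes each pre-encoded camera view is split into equal-duration segments that each begin at a random-access point, so the server switches files only at segment boundaries, where a decoder can start on the new view cleanly. The `send` callable stands in for a UDP socket's `sendto`, and the simulated gestures stand in for messages read from the upstream TCP socket.

```python
from typing import Callable, Dict, List, Optional


class ViewSwitcher:
    """Hypothetical model of edge-server view switching (not the demo's code).

    views[v][i] is segment i of camera view v. Every view carries the same
    content and timing, and each segment is assumed to begin at a random-access
    point so a decoder can pick up the new view cleanly.
    """

    def __init__(self, views: List[List[bytes]], gesture_to_view: Dict[str, int]):
        self.views = views
        self.gesture_to_view = gesture_to_view
        self.current = 0                     # camera view currently streamed
        self.pending: Optional[int] = None   # view requested by the last valid gesture

    def on_gesture(self, gesture: str) -> bool:
        """Record a gesture from the upstream channel; unknown gestures are ignored."""
        view = self.gesture_to_view.get(gesture)
        if view is None:
            return False
        self.pending = view
        return True

    def stream(self, send: Callable[[bytes], None],
               gestures: Optional[Dict[int, str]] = None) -> List[int]:
        """Send all segments in order, switching views only at segment boundaries.

        `send` stands in for a UDP socket's sendto. `gestures` simulates the client:
        it maps a segment index to a gesture that arrives just before that segment.
        Returns the camera view used for each segment.
        """
        gestures = gestures or {}
        used: List[int] = []
        for i in range(len(self.views[0])):
            if i in gestures:
                self.on_gesture(gestures[i])
            if self.pending is not None:     # apply the switch at this boundary
                self.current, self.pending = self.pending, None
            send(self.views[self.current][i])
            used.append(self.current)
        return used


# Example: three camera views, four segments each.
views = [[f"v{v}s{i}".encode() for i in range(4)] for v in range(3)]
switcher = ViewSwitcher(views, {"swipe_left": 0, "point": 1, "swipe_right": 2})
sent: List[bytes] = []
used = switcher.stream(sent.append, gestures={1: "swipe_right", 2: "wave", 3: "point"})
print(used)  # [0, 2, 2, 1]
```

In the example, the unrecognized "wave" gesture leaves the stream on view 2, mirroring the rule that only a valid gesture triggers a switch. In a live system, `send` might wrap `sock.sendto(data, client_addr)` on a `socket.socket(socket.AF_INET, socket.SOCK_DGRAM)`, with each segment further split into datagrams small enough to fit the path MTU.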

Conclusion: What can the industry do now to prepare?

Immersive media has the capability to transform productivity and entertainment in the future, and cable networks are poised to play a key role in this transformation.

Realizing this vision will require close collaboration between cable companies and the wider industry, as exemplified by the Immersive Digital Experiences Alliance (IDEA), recently formed by CableLabs, Charter and Cox along with industry players such as Otoy, GridRaster, PlutoVR, Light Field Lab and Looking Glass Factory to create immersive interchange formats.

The SCTE Cable-Tec Expo demonstration was a powerful example of how cable industry partners came together to showcase a novel application that would need an entire ecosystem for delivery at scale. Building on this momentum, we believe the near future offers an opportunity for operators to create a “10G sandbox” platform for immersive technology innovation, which could be used to evaluate the performance of candidate technologies over the network and evangelize the best ones.

Andrew Ip,

SVP, Emerging Technology and Innovation,

Charter Communications

Andrew leads the Advanced Technology and R&D capabilities at Charter. Previously, he was at Madison Square Garden Ventures, and at Cablevision he led the infrastructure engineering and wireless technology functions, as well as the Optimum Labs group.

Dhananjay Lal,

Senior Director, Emerging Platforms and Technologies,

Charter Communications

DJ leads ecosystem-related R&D activities at Charter. Previously, he held product management, engineering and research roles of increasing responsibility at Bosch, Eaton, Emerson and Time Warner Cable.