5G Low Latency Requirements

By Ronan McLaughlin

5G brings together a new radio (NR) and a cloud native 5G core (5GC), enabling a service-based architecture implemented as microservices deployed in containers. The 5G architecture also delivers a true separation of control plane (CP) and user plane (UP) functionalities, which enables advanced network slicing capabilities. This in turn allows a single network architecture to support a multitude of diverse use cases, each of which can require different network service characteristics (bandwidth, latency, jitter, number of simultaneous connections, connection persistence, etc.).

The 5G system design parameters specify a system capable of delivering an enhanced mobile broadband (eMBB) experience in which users should see a minimum of 50-100 Mbps everywhere and peak speeds greater than 10 Gbps, with a service latency of less than 1 ms, all while moving at more than 300 miles per hour! In addition to enhancing the mobile broadband experience, a 5G system is also designed to address massive machine-type communications, providing scalability and flexibility for deploying Internet of Things (IoT) devices with battery lives exceeding 10 years.

The third area of design focus for a 5G system is for the end-to-end system to have extreme availability greater than 99.999%, and to be capable of rendering ultra-reliable and low latency communications (URLLC) with less than 5 ms end-to-end system delay. The URLLC reliability requirement for transmission of a 32-byte packet is 1 − 10⁻⁵ (99.999%) with a user plane latency of 1 ms, corresponding to a maximum block error rate (BLER) of 10⁻⁵, or 0.001%. To deploy URLLC type services there are several contributory factors that need to be considered, for example: appropriate spectrum selection for coverage and capacity; radio access network (RAN) transport selection; and 5G core network architectural options. We shall examine some of the selection criteria for these options in the remainder of this article.
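The reliability and availability targets above are simple complements, and a short calculation makes the scale concrete. The following is a minimal sketch only; the function names are illustrative and not taken from any 5G specification or library, and the downtime estimate assumes a non-leap year.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # assumption: non-leap year, rough estimate only


def max_error_rate(reliability: float) -> float:
    """Return the largest tolerable error rate for a reliability target (0..1)."""
    if not 0.0 <= reliability <= 1.0:
        raise ValueError("reliability must be between 0 and 1")
    return 1.0 - reliability


def downtime_minutes_per_year(availability: float) -> float:
    """Return the yearly downtime implied by an availability target (0..1)."""
    return max_error_rate(availability) * MINUTES_PER_YEAR


if __name__ == "__main__":
    bler = max_error_rate(1 - 1e-5)
    print(f"Maximum BLER: {bler:.1e} ({bler * 100:.3f}%)")
    print(f"Downtime at 99.999%: {downtime_minutes_per_year(0.99999):.2f} min/year")
```

Seen this way, "five nines" of availability leaves roughly five minutes of downtime per year, and the URLLC target tolerates one failed 32-byte packet in every 100,000.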

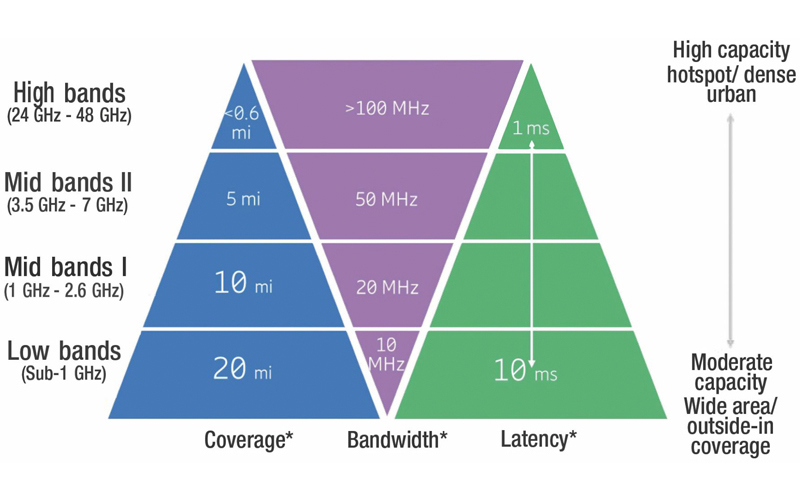

Deployment of any 5G service requires an analysis of available spectrum options and choosing an appropriate spectrum range or combination for the target use case; this is especially true in the case of low latency services. To achieve the higher data rates typically associated with 5G systems, wide channel bandwidths are required. Spectrum bands with a default channel bandwidth of 50 MHz or 100 MHz usually reside in the high-band spectrum range; however, 5G systems can use any available spectrum and are not limited to high-band or millimeter wave spectrum. Multi-layer and carrier aggregation capabilities can be leveraged throughout all bands to achieve desired results. As such, 5G should be considered as an evolution that can build on all spectrum assets.

There are three spectrum classifications: low band, mid-band and high-band. Low band spectrum is below 1 GHz and generally considered as the “beachfront property” of all spectrum due to its wide-area coverage characteristics and its ability to provide outside-in and deep indoor coverage. 5 MHz, 10 MHz and 20 MHz are the typical deployed channel bandwidths in this spectrum range. Low band spectrum is the basis for a lot of existing 2G, 3G, and 4G services — as well as IoT — and will continue to serve these segments in the future. Low band spectrum will be used for 5G applications that do not require super low latency and high persistent data rates and is typically optimized for macrocell (wide-area coverage) network deployments.

Mid-band spectrum is usually considered as spectrum above 1 GHz and below 6 GHz, but in some cases it can extend all the way up to 8 GHz. There are a lot of capabilities unlocked in this frequency range with licensed, lightly-licensed (e.g., CBRS 3.55 GHz to 3.7 GHz) and unlicensed spectrum (e.g., 5 GHz) deployment models. Planning is in place to make more mid-band spectrum available in the coming years, for example C-Band (3.7 GHz to 4.2 GHz), in which 50 MHz and 100 MHz channel bandwidths are achievable depending on spectrum allocation. The coverage, throughput, latency and capacity characteristics of mid-band spectrum make it an excellent candidate for deployment of 5G URLLC services. These characteristics can further be improved by combining mid-band and low band spectrum assets. Mid-band spectrum is typically optimized for macrocell deployments and/or small cell deployments between 3 GHz and 6 GHz. Collectively bands from 450 MHz to 6 GHz are known as 5G Frequency Range 1. Initial mid-band 5G deployments in the U.S. will happen in the 2.5 GHz band.

So where are the ultra-low latency benefits promised by 5G, you ask? They’re in the third spectrum classification — high-band — in which bi-directional multi-gigabit speeds and ultra-low latencies really come to life. High-band spectrum can refer to anything above 12 GHz and within this classification is millimeter wave spectrum which extends from 30 GHz to 300 GHz. Initial 5G deployments here are targeted in the 24 GHz to 52 GHz frequency range collectively known as 5G Frequency Range 2. In the U.S. the first commercial systems are being deployed in 28 GHz and 39 GHz bands. At these frequencies, channel bandwidths are typically either 50 MHz or 100 MHz, with the potential to aggregate multiple contiguous channels together to enable bi-directional multi-gigabit services. In addition, the form factor of antenna elements operating at these frequencies is relatively small such that they can be packed together in dense arrays to enable massive system capacities.
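The three spectrum classifications and the two 5G frequency ranges can be captured in a small lookup. This is a sketch that uses only the boundaries quoted in this article (low band below 1 GHz, mid-band up to 6 GHz, high-band above 12 GHz, Frequency Range 1 from 450 MHz to 6 GHz, Frequency Range 2 from 24 GHz to 52 GHz). The function names are hypothetical, and treating 6 GHz to 12 GHz as "unclassified" is an assumption made here because the article does not assign that span to a single class.

```python
from typing import Optional


def classify_band(freq_ghz: float) -> str:
    """Map a carrier frequency in GHz to the article's spectrum classification."""
    if freq_ghz <= 0:
        raise ValueError("frequency must be positive")
    if freq_ghz < 1.0:
        return "low band"
    if freq_ghz <= 6.0:
        return "mid-band"
    if freq_ghz > 12.0:
        return "high-band"
    # Assumption: the article leaves 6-12 GHz without a firm classification.
    return "unclassified"


def frequency_range(freq_ghz: float) -> Optional[str]:
    """Return "FR1" or "FR2" using the article's limits, or None if outside both."""
    if 0.45 <= freq_ghz <= 6.0:
        return "FR1"
    if 24.0 <= freq_ghz <= 52.0:
        return "FR2"
    return None


if __name__ == "__main__":
    for f in (0.6, 2.5, 3.55, 28.0, 39.0):
        print(f"{f} GHz: {classify_band(f)}, {frequency_range(f)}")
```

A planning tool could use a lookup of this kind to flag which candidate carriers suit wide-area coverage and which suit dense, high-capacity deployments.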

Of course, there can be some disadvantages when operating systems at these high-band frequencies, including attenuation due to foliage or atmospheric conditions. These limit the ability of high-band systems to serve wide-area coverage-based use cases. However, an effective combination strategy with low band or mid-band spectrum can overcome these disadvantages and yield an optimal network that can perform a multitude of functions and use cases like fixed wireless access (FWA), eMBB, Massive IoT and URLLC.

5G system design introduces a virtual radio access network (vRAN) architecture in which higher layer RAN functions (Layer 3) are centralized in the cloud. Splitting the radio functions in this way introduces new transport network latency requirements: the one-way latency objective between the 5G radio site and the vRAN node location is typically 5 ms. This latency budget is achievable in a variety of transport network technologies including low latency DOCSIS (LLD) and optical fiber transport networks. The vRAN node can be connected to a 5G EPC or 5GC site using Ethernet switching or a traditional routed network; however, these choices can introduce additional latency in the end-to-end network as well as other challenges like distribution of timing, achieving sync and deploying large quantities of 10 Gb ports. For URLLC use cases the end-to-end latency requirement is a mere 5 ms, requiring heavy reliance on fiber and optical switching networks at the transport layer.
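To get a feel for the 5 ms one-way transport budget, it helps to translate it into fiber distance and equipment delay. The sketch below is a rough estimate under stated assumptions, not a planning tool: it assumes about 5 microseconds of propagation delay per kilometer of optical fiber (a common rule of thumb), and the per-hop delay parameter is a hypothetical figure the reader would replace with measured values for their own switches or routers.

```python
FIBER_DELAY_US_PER_KM = 5.0  # assumption: rule-of-thumb propagation delay in fiber


def one_way_latency_ms(fiber_km: float, hops: int = 0,
                       per_hop_delay_us: float = 0.0) -> float:
    """Estimate one-way transport latency from fiber length and per-hop delay."""
    if fiber_km < 0 or hops < 0 or per_hop_delay_us < 0:
        raise ValueError("inputs must be non-negative")
    total_us = fiber_km * FIBER_DELAY_US_PER_KM + hops * per_hop_delay_us
    return total_us / 1000.0


def fits_budget(fiber_km: float, budget_ms: float = 5.0, hops: int = 0,
                per_hop_delay_us: float = 0.0) -> bool:
    """Check whether the estimated one-way latency stays within the budget."""
    return one_way_latency_ms(fiber_km, hops, per_hop_delay_us) <= budget_ms


if __name__ == "__main__":
    # Hypothetical path: 100 km of fiber crossing 4 devices at 50 us each.
    latency = one_way_latency_ms(100, hops=4, per_hop_delay_us=50.0)
    print(f"Estimated one-way latency: {latency:.2f} ms")
    print(f"Within 5 ms budget: {fits_budget(100, hops=4, per_hop_delay_us=50.0)}")
```

Under these assumptions propagation alone consumes the whole 5 ms at around 1,000 km of fiber, and every switching or routing hop reduces the reachable distance further. The estimate deliberately ignores queuing, serialization and processing in the radio and core, all of which also draw on the end-to-end budget.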

There are several core network architectural options available to introduce and deploy 5G networks. The two dominant options are Option 3: non-standalone (NSA) and Option 2: standalone (SA). There is no difference from an end-user perspective: a 5G device will connect to a 5G base station in either case. The main differences between the two choices are in the form of the core network, its capabilities and how the radio network interacts with it. In the Option 3 case an existing 4G evolved packet core (EPC) is upgraded to support 5G NR user plane traffic from a radio base station, and the control plane traffic is tunneled through a 4G base station back to the 5G EPC, which is capable of dual mode 4G/5G operation. A consequence of this is that new quality of service (QoS) class identifiers (QCIs) are added to the 5G EPC, building on the existing 4G QCI framework for traffic prioritization, so that it can appropriately prioritize and handle traffic from new 5G use cases such as URLLC. In the Option 2 case, a 5G base station interacts with a cloud native 5GC; here new 5G QoS class identifiers called 5QIs have been defined to allow a 5GC to prioritize traffic appropriately. It is the Option 2 architecture that will be further developed and optimized for URLLC type services, but from a core perspective either architectural option is viable, provided the end-to-end system latencies are preserved.

In conclusion, LTE, LTE/NR NSA and SA 5G networks can support URLLC type services with the right spectrum combination, provided that the radio and core network sites are connected with a sufficiently capable low latency transport network. Transport network engineering is the real key to the successful deployment of low latency services. The right choice of transport network (DOCSIS, LLD, optical, Ethernet, IP routed) is highly dependent on the use case that is being addressed and its specific end-to-end latency requirements. There are also many techniques under development at the radio layer in NR, such as scheduling optimization using mini-slots, instant uplink access, and fast processing, to support URLLC and other low latency use cases. These can be explored in detail in future articles.

Ronan McLaughlin is a Technology Solutions Principal and Wireless Strategy Lead for the Cable Customer Unit at Ericsson. He has more than 20 years of unique experience in IP and ICT fields, spanning fixed and mobile network technologies. McLaughlin holds a Bachelor of Engineering degree in Electronic Engineering from University College Dublin, Ireland and has completed the SCTE•ISBE-Tuck Executive Leadership Program at Dartmouth. He also serves on the SCTE•ISBE Cable-Tec Expo 2019 Program Committee.