“Hey, OFDMA, Why Are You So Sensitive?”

By Chris Topazi

In previous articles in this publication, we have discussed OFDMA, its benefits and some of the lessons learned in our deployments. In this article, we address a question that our leadership and field personnel ask quite frequently: why does OFDMA appear to be more sensitive to noise than our traditional ATDMA upstream, and why do we need superior plant performance in nodes employing OFDMA?

The short answer is that OFDMA is more sensitive to noise and ingress because we are:

- Operating at MUCH higher modulation orders, which require higher signal-to-noise ratios

- Using more spectrum

- Using a SINGLE channel, as opposed to multiple channels

We will dive into each of these areas briefly below.

Modulation orders

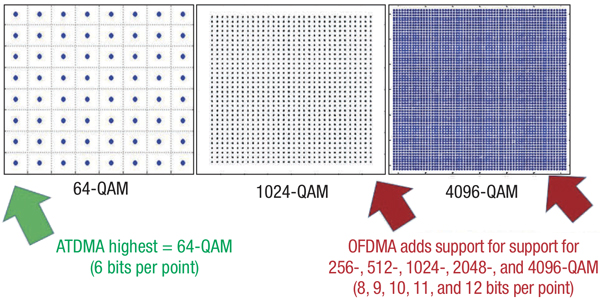

Modulation order is, in its simplest form, the number of possible QAM symbols within a constellation pattern. It is important to note that the constellation pattern area is the same regardless of how many possible symbols one chooses to pack into it. Therefore, as modulation order increases, you need a better signal-to-noise ratio (or, to be technically correct for QAM, a better modulation error ratio, MER) to determine the correct symbol location. The accompanying figure shows example constellations and just how much denser they become at higher modulation orders.

Higher modulation orders mean higher capacity, because each point in the constellation represents more bits. So, for every QAM symbol (or constellation point), more bits are transmitted at higher modulation orders. The presence of noise, though, makes the constellation “blurry” to the receiver, which means the receiver may not be able to determine which point in the constellation was transmitted. For less dense constellations, you can tolerate more error in the “location of the dot” at the receiver, because the distance to the boundary between adjacent points (technically, the “decision boundary”) is greater. For denser constellations, or higher modulation orders, to work, the signal must be cleaner. It is also important to note that with higher modulation orders, each lost symbol costs more bits. It’s definitely a risk/reward tradeoff. So, why do this?
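As a rough illustration of the density argument, the sketch below computes bits per symbol (log2 M) and the spacing between adjacent points when every square M-QAM constellation is scaled to the same average power, which is the “same area” point made above. It assumes idealized square constellations and no FEC; the function names are hypothetical, and 2048-QAM (a non-square cross constellation) is deliberately left out of the distance calculation.

```python
import math


def bits_per_symbol(m: int) -> int:
    """Bits carried by one M-QAM symbol: log2(M)."""
    return int(math.log2(m))


def min_distance(m: int) -> float:
    """Spacing between adjacent points of a square M-QAM constellation
    scaled to unit average power, i.e., the same 'area' for every M."""
    side = math.isqrt(m)
    if side * side != m:
        raise ValueError("square QAM only (4, 16, 64, 256, 1024, 4096)")
    return math.sqrt(6.0 / (m - 1))


def extra_mer_db(m_high: int, m_low: int) -> float:
    """Extra MER (dB) needed at m_high to keep the same distance-to-noise
    ratio as m_low, under an idealized no-FEC model."""
    return 20 * math.log10(min_distance(m_low) / min_distance(m_high))


for m in (64, 256, 1024, 4096):
    print(f"{m:>5}-QAM: {bits_per_symbol(m):>2} bits/symbol, "
          f"d_min = {min_distance(m):.4f}, "
          f"+{extra_mer_db(m, 64):.1f} dB vs. 64-QAM")
```

In this idealized model, each fourfold increase in constellation points costs roughly 6 dB of additional MER. FEC shifts the absolute thresholds, so the configured values in the accompanying table will not match this arithmetic exactly.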

We are being asked to use these higher modulation orders to get more capacity out of the spectrum in use. These higher modulation orders are key to providing the competitive products that we are being asked to deliver. The added capacity also has the positive impact of deferring node actions such as splits and segmentations. As an example, a mid-split OFDMA channel from 40 MHz to 85 MHz has a capacity of 201 Mbps at 64-QAM vs. 395 Mbps at 2048-QAM.

This comes at a cost. With ATDMA, the network needs to support only 64-QAM, which works flawlessly down to about 27 dB MER and has a hard lower limit of about 24-25 dB MER before uncorrectable FEC errors begin. With OFDMA, we have options as high as 4096-QAM.

A more accurate description of the issue is that OFDMA is more sensitive to intermittent noise. The system works well with constant noise; in fact, processes and Profile Management Application (PMA) solutions have been designed to use profiles that avoid even severe known ingress. Intermittent noise is the issue, because OFDMA also uses dynamic modulation orders.

OFDMA modems complete a probing cycle at registration and periodically thereafter to determine the modulation order that can be used. If the plant is clean at the time of that probe, a high modulation order will be used. If noise then becomes present in the plant between probing cycles, the signal-to-noise ratio can no longer support the modulation order that has been assigned. This results in uncorrectable FEC errors, which the customer perceives as packet loss.
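The probe-then-degrade behavior can be sketched as follows. The OFDMA MER thresholds are hypothetical placeholders, chosen only to be consistent with the example in this article (256-QAM usable at 28 dB, 2048-QAM assigned at 38 dB); they are not the Cox configuration. The 27 dB ATDMA figure is the one cited in the text.

```python
from typing import List, Optional

# HYPOTHETICAL minimum MER (dB) per OFDMA modulation order: placeholders,
# not the values from any real CMTS or profile configuration.
MIN_MER_DB = {
    64: 22.0, 128: 25.0, 256: 28.0, 512: 31.0,
    1024: 34.0, 2048: 37.0, 4096: 40.0,
}
ATDMA_64QAM_MIN_MER_DB = 27.0  # error-free 64-QAM level cited in the article


def assign_order(probe_mer_db: float) -> Optional[int]:
    """Highest order whose MER requirement is met at probe time."""
    usable = [m for m, need in MIN_MER_DB.items() if probe_mer_db >= need]
    return max(usable) if usable else None


def ofdma_errored(probe_mer_db: float, mer_samples_db: List[float]) -> List[int]:
    """Indices of later MER samples too low for the order chosen at the probe."""
    order = assign_order(probe_mer_db)
    if order is None:
        raise ValueError("probe MER too low for any configured order")
    need = MIN_MER_DB[order]
    return [i for i, mer in enumerate(mer_samples_db) if mer < need]


def atdma_errored(mer_samples_db: List[float]) -> List[int]:
    """ATDMA stays at 64-QAM, so only its single threshold matters."""
    return [i for i, mer in enumerate(mer_samples_db) if mer < ATDMA_64QAM_MIN_MER_DB]


# MER swinging between 38 dB and 28 dB, like the example node in this article.
samples = [38.0, 37.5, 31.0, 28.0, 30.0, 38.0]
print(assign_order(38.0))            # 2048
print(ofdma_errored(38.0, samples))  # [2, 3, 4]
print(atdma_errored(samples))        # []
```

The same MER trace produces errors only on the OFDMA side, because only OFDMA committed to an order based on a momentarily clean probe.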

As an example, take a node where the MER performance varies between 28 dB at the worst and 38 dB at the best due to common path distortion or similar effects. We’ve seen many examples of these in the field.

For ATDMA, it can vary in that range all day, and no one sees the impact because it remains above the level of 27 dB MER that is required for error-free 64-QAM operation. ATDMA is not impacted simply because it never tries to run any higher modulation.

For OFDMA, if the probe occurs when the MER is 38 dB, the modem will be assigned 2048-QAM. If noise then appears in the node and the MER drops to 28 dB, 2048-QAM can no longer be used. Modems in the node begin experiencing uncorrectable FEC errors; the CMTS recognizes this and begins shifting the modulation order down, finally reaching 256-QAM, which OFDMA can sustain at 28 dB MER. Until the CMTS has downshifted all the way to 256-QAM, the customer experiences packet loss.
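The downshift sequence can be modeled as a ladder that the CMTS walks down one rung per detection interval. This is a simplified, hypothetical model: real CMTS implementations may step down differently, and the MER thresholds are placeholders rather than the values in the accompanying table.

```python
from typing import List, Tuple

# HYPOTHETICAL ladder of (modulation order, minimum MER in dB), highest first.
# Placeholder values only; they are not a real CMTS configuration.
LADDER: List[Tuple[int, float]] = [
    (4096, 40.0), (2048, 37.0), (1024, 34.0), (512, 31.0),
    (256, 28.0), (128, 25.0), (64, 22.0),
]


def downshift(start_order: int, mer_db: float) -> Tuple[int, int]:
    """Drop one rung per detection interval while the current MER cannot
    support the order in use. Returns (order reached, errored intervals).
    If even the lowest rung is unsupported, that rung is returned anyway."""
    idx = [order for order, _ in LADDER].index(start_order)
    errored = 0
    while LADDER[idx][1] > mer_db:
        errored += 1  # this interval sees uncorrectable FEC errors
        if idx == len(LADDER) - 1:
            break  # nothing lower to fall back to
        idx += 1
    return LADDER[idx][0], errored


print(downshift(2048, 28.0))  # (256, 3)
print(downshift(2048, 38.0))  # (2048, 0)
```

Under these assumptions, every rung between the probed order and the sustainable one adds an interval of packet loss, so the wider the gap between probe-time MER and worst-case MER, the longer the customer impact.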

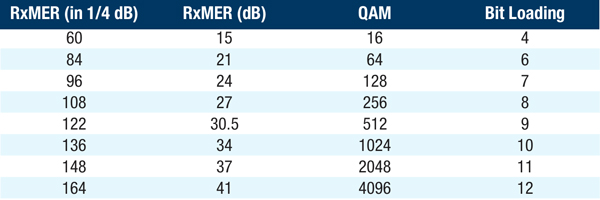

The accompanying table shows the modulation orders in use and the MER required for each, as configured in the Cox implementation.

What’s interesting in that table is that OFDMA performs BETTER than ATDMA for a given MER value. This is due to a stronger forward error correction algorithm (LDPC for OFDMA vs. Reed-Solomon for ATDMA). OFDMA is disadvantaged by its dynamic modulation orders in the presence of intermittent noise, but that is the price you pay for using higher modulation orders and the associated gain in capacity.

More spectrum

With OFDMA, we are expanding into the 40 MHz to 85 MHz region. We had previously deployed four additional ATDMA channels in mid-split nodes as well, but they occupied only roughly 25 MHz of that 45 MHz of spectrum. In addition, those ATDMA channels were deliberately placed to avoid known ingress sources.

While we do have some modified OFDMA profiles to avoid over-the-air TV broadcasters, other noise and ingress sources elsewhere in the band of interest will interfere with the OFDMA channel to some degree. This may not be the case with ATDMA, as its channels may not be located at the same frequencies as those noise sources.
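The spectrum argument reduces to a simple overlap check. The sketch below uses a hypothetical mid-split plan: four 6.4 MHz ATDMA channels (4 × 6.4 = 25.6 MHz, the “roughly 25 MHz” above) placed between two hypothetical ingress carriers, versus one OFDMA channel spanning 40-85 MHz. Channel edges are treated as hard edges, and guard bands and exclusion zones are ignored.

```python
from typing import List, Tuple

Band = Tuple[float, float]  # (start MHz, stop MHz)

# HYPOTHETICAL mid-split plan: four 6.4 MHz ATDMA channels placed between
# known ingress, versus one OFDMA channel spanning the 40-85 MHz band.
ATDMA_CHANNELS: List[Band] = [(42.0, 48.4), (52.0, 58.4), (60.0, 66.4), (76.0, 82.4)]
OFDMA_CHANNEL: List[Band] = [(40.0, 85.0)]

# HYPOTHETICAL narrowband ingress frequencies (MHz) not covered by an exclusion.
INGRESS_MHZ = [50.0, 70.5]


def channels_hit(channels: List[Band], ingress_mhz: List[float]) -> List[Band]:
    """Channels that contain at least one ingress carrier."""
    return [(lo, hi) for lo, hi in channels
            if any(lo <= f <= hi for f in ingress_mhz)]


occupied = sum(hi - lo for lo, hi in ATDMA_CHANNELS)
print(f"ATDMA occupies {occupied:.1f} of 45.0 MHz")
print("ATDMA channels hit:", channels_hit(ATDMA_CHANNELS, INGRESS_MHZ))
print("OFDMA channels hit:", channels_hit(OFDMA_CHANNEL, INGRESS_MHZ))
```

Ingress that falls in the gaps of a carefully placed ATDMA plan still lands inside a channel that fills the band.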

OFDMA operates as a single channel

OFDMA is quite resilient because the channel comprises many narrow subcarriers. However, it is still a single channel, and under certain conditions, including wideband noise and other effects, an impairment can lead to severe loss of capacity. We have also observed some special cases in our network that can require more advanced troubleshooting.

In instances where consistent narrowband noise is affecting an OFDMA channel, we have methods to deal with it: PMA, which creates interval usage codes (IUCs) with variable bit loading, is one; unused bands are another. These work well when the narrowband noise is consistent. However, what happens when the narrowband noise is intermittent?
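To illustrate the idea behind variable bit loading and unused bands, the hypothetical sketch below picks a modulation order for each minislot from that minislot’s measured MER and marks minislots that cannot support even the lowest order as unused. It is not PMA’s actual algorithm; the thresholds and the function name are assumptions.

```python
from typing import Dict, List, Optional

# HYPOTHETICAL minimum MER (dB) per order; placeholders, not a PMA or CMTS table.
MIN_MER_DB: Dict[int, float] = {64: 22.0, 256: 28.0, 1024: 34.0, 2048: 37.0}


def bit_loading(minislot_mer_db: List[float]) -> List[Optional[int]]:
    """Choose an order per minislot; None marks a minislot left unused
    because even the lowest order is not supported there."""
    plan: List[Optional[int]] = []
    for mer in minislot_mer_db:
        usable = [m for m, need in MIN_MER_DB.items() if mer >= need]
        plan.append(max(usable) if usable else None)
    return plan


# Consistent narrowband noise degrades the third and fourth minislots only.
print(bit_loading([38.0, 38.0, 29.0, 18.0, 38.0]))
```

This works only because the noise is assumed to be consistent. If the noise comes and goes between measurements, the per-minislot MER that drives the plan is stale.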

Because there are multiple ATDMA channels, it is quite obvious which channel is impacted by intermittent narrowband noise. With ATDMA, if the noise under a single channel is bad enough, that channel will be removed from use or will show FEC errors, and it is clear which 6.4 MHz band contains the noise. If the channel is rendered inoperable, most upstream capacity is maintained; in our case, seven of eight channels remain operational. Should we seek to avoid that band, we know where it is and can adapt our upstream channel plan accordingly.
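Per-channel error reporting is what makes ATDMA easy to localize. The sketch below maps the channels reporting uncorrectable FEC errors back to their 6.4 MHz spans and computes the capacity left if those channels are removed. The channel centers and error counts are hypothetical, and every channel is assumed to carry equal capacity.

```python
from typing import Dict, List, Tuple

# HYPOTHETICAL per-channel counters for an eight-channel ATDMA plan:
# channel id -> (center frequency in MHz, uncorrectable FEC codewords).
FecCounters = Dict[int, Tuple[float, int]]


def localize(counters: FecCounters, width_mhz: float = 6.4) -> List[Tuple[float, float]]:
    """Frequency span of every channel reporting uncorrectable errors."""
    return [(center - width_mhz / 2, center + width_mhz / 2)
            for center, unc in counters.values() if unc > 0]


def capacity_retained(counters: FecCounters) -> float:
    """Share of upstream capacity left if every errored channel is removed,
    assuming all channels carry equal capacity."""
    clean = sum(1 for _, unc in counters.values() if unc == 0)
    return clean / len(counters)


centers = [16.4, 22.8, 29.2, 35.6, 45.2, 55.2, 63.2, 79.2]
counters: FecCounters = {i + 1: (c, 0) for i, c in enumerate(centers)}
counters[7] = (63.2, 1520)  # hypothetical intermittent noise under channel 7
print(localize(counters))
print(capacity_retained(counters))  # 0.875
```

With eight channels, losing one leaves seven of eight (87.5%) of the capacity, and the errored channel’s span points directly at the noise.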

With OFDMA, a modem can be scheduled at any time in any 400 kHz minislot within the channel, so if intermittent narrowband noise is present and missed by the periodic probing measurements, a modem will eventually experience its impact. When this occurs, modems become impaired on the entire OFDMA channel because of the FEC errors generated by this intermittent noise. If this results in a partial service state, it represents a rather large loss of capacity. It can also be challenging to localize the noise within the spectrum, as errors are reported for the entire channel, not on a per-minislot basis. Until the noise is localized within the band, it is difficult to address with the techniques discussed above.
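Why “over time, a modem will experience the impact” holds can be seen with a simple probability model. The sketch assumes each grant occupies a single minislot chosen uniformly at random, and that the noise’s on/off timing is independent of scheduling; the minislot count and duty cycle are hypothetical.

```python
# Simplified model, assuming grants land uniformly at random across minislots
# and the noise's on/off timing is independent of scheduling.

def p_hit_per_grant(noisy_minislots: int, total_minislots: int, duty_cycle: float) -> float:
    """Chance one grant lands in a noisy minislot while the noise is present."""
    return (noisy_minislots / total_minislots) * duty_cycle


def p_hit_within(n_grants: int, p_per_grant: float) -> float:
    """Chance that at least one of n independent grants is hit: 1 - (1 - p)^n."""
    return 1.0 - (1.0 - p_per_grant) ** n_grants


# HYPOTHETICAL numbers: roughly 45 MHz / 400 kHz = 112 minislots, one of them
# under noise that is present 10% of the time.
p = p_hit_per_grant(1, 112, 0.10)
for n in (10, 100, 1000, 10000):
    print(f"{n:>6} grants: {p_hit_within(n, p):.1%} chance of at least one hit")
```

Even a rare, narrow interferer becomes close to certain to hit an active modem once enough grants accumulate, and a probe that happens to run while the noise is off will not see it.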

Interestingly, in the downstream, OFDM is not as sensitive to this intermittent narrowband noise. This is because of one of the key differences between OFDM and OFDMA: the minislot concept. Modems are granted 400 kHz slices of bandwidth in the upstream, so narrowband noise that falls within a modem’s grant leaves the data in that minislot with a very low chance of being received properly. With OFDM, the subcarriers carrying a modem’s downstream data are scrambled and “spread” across the channel, so a smaller share of any single modem’s information is lost to narrowband interference, and that loss is more likely to be corrected by the LDPC FEC algorithm. This is a key difference, as many people expect OFDM and OFDMA to be more similar than they actually are.
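A simplified way to see the difference is to compare the share of one modem’s data that sits under the same narrowband interferer in each direction. The sketch assumes upstream data is confined to a single 400 kHz minislot that the noise fully overlaps, and downstream data is spread evenly across a 192 MHz OFDM channel (the maximum DOCSIS 3.1 downstream OFDM channel width). The 400 kHz noise width is hypothetical, and the model ignores how LDPC codewords are actually mapped to subcarriers.

```python
def impaired_fraction(spread_mhz: float, noise_overlap_mhz: float) -> float:
    """Fraction of a modem's subcarriers under the noise, assuming its data is
    spread evenly over spread_mhz and the noise overlaps noise_overlap_mhz of it."""
    if spread_mhz <= 0:
        raise ValueError("spread_mhz must be positive")
    return min(max(noise_overlap_mhz, 0.0), spread_mhz) / spread_mhz


NOISE_MHZ = 0.4  # hypothetical narrowband interferer width

upstream = impaired_fraction(0.4, NOISE_MHZ)      # data confined to one 400 kHz minislot
downstream = impaired_fraction(192.0, NOISE_MHZ)  # data interleaved across a 192 MHz channel
print(f"upstream: {upstream:.1%}, downstream: {downstream:.2%}")
```

Under these assumptions, the upstream case leaves essentially nothing intact inside the affected minislot for the FEC to work with, while the downstream case loses only a small fraction that LDPC is far more likely to correct.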

We hope that this helps others understand the importance of improved plant maintenance and performance for optimal OFDMA operation, and helps to answer some of the questions around OFDMA’s strengths and weaknesses. The more people understand, the more likely it is that we can drive the industry to determine best practices for this exciting new technology and provide even better reliability and products for our customers.

Chris Topazi

Principal Architect

Cox Communications, Inc.

Chris Topazi is a Principal Architect at Cox Communications, where he is responsible for testing and development of deployment guidelines for DOCSIS 3.1/4.0 technology. He has worked in the cable telecommunications industry for nearly 25 years, including the past 10 years at Cox. Prior to joining the Cox Communications team, Chris designed and developed products for both headend and outside plant applications at Scientific Atlanta and Cisco.

Figure and table provided by author