Enabling Low Latency DOCSIS to Improve Latency and Bandwidth Utilization

By Robert Ferreira and Barak Hermesh

Over the last three decades, residential broadband technology has advanced at a fast pace, from dial-up connections to gigabit services. The technology advanced along several vectors, including manageability, reliability, security and privacy. One vector in particular drove major innovations and investments: bandwidth. Residential broadband services are defined solely by bandwidth, and specifically by downstream bandwidth.

Recently, network latency has emerged as a key experience indicator for highly interactive services like online gaming. Notably, the 5G standard includes latency as a key component of the ultra-reliable low-latency communications (URLLC) concept, paving the way for greater focus on latency improvements in other communication technologies.

In January 2019, the DOCSIS 3.1 specification also received a major update that added low latency DOCSIS (LLD) to the standard. LLD aims both to reduce the median latency for all traffic and to provide deterministic low latency for selected traffic flows. It targets 1 ms of queuing latency and an overall round-trip time of <10 ms within the access network.

This article discusses the impact of the major techniques introduced in LLD on latency and bandwidth utilization and suggests mitigation options for issues related to bandwidth waste. The data discussed here was collected from experiments conducted in an Intel lab where gaming endpoints and test equipment were integrated into a DOCSIS network.

LLD lab study

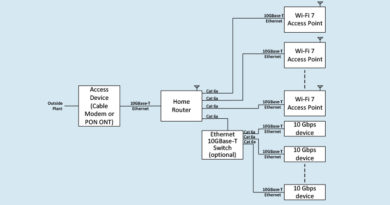

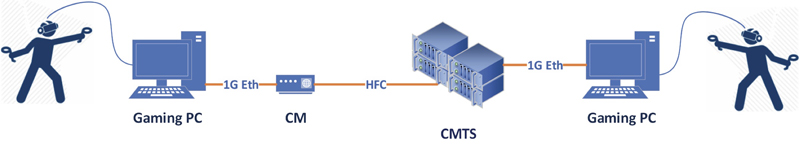

In order to study the impact of LLD on gaming latency, two powerful gaming PCs with VR headsets were connected as shown in Figure 1.

Two major tests were performed:

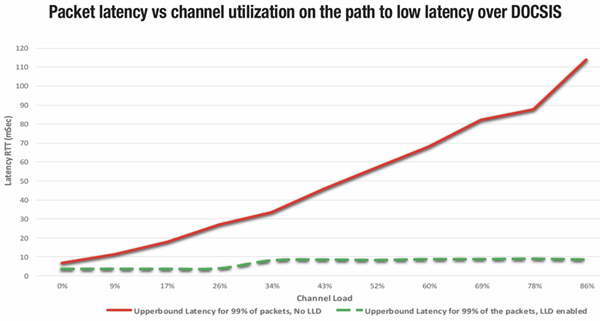

The first test measured the impact of background upstream traffic on packet latency between the two PCs. The test was performed first with legacy DOCSIS 3.1 and then repeated with two LLD techniques enabled: multi-service flow and proactive granting. The latency-sensitive flow was classified to a dedicated latency service flow, while all background traffic was classified to the default service flow. For the latency service flow, the CMTS was configured to provide proactive grants large enough to accommodate a single packet at a cadence of 5 ms. Background traffic was increased in steps of 1 Mbps, and at each step the round-trip time of the latency-sensitive traffic was measured once per second for 200 seconds.

As seen in Figure 2, without LLD the round-trip time is 60 ms at an upstream channel utilization of 55%, and at high loads latency climbs to over 100 ms. The 60 ms mark is where experienced online gamers start to sense latency and choose to quit the online game session. With LLD applied, latency remains bounded at <10 ms.

The goal of the second test was to estimate the inefficiency of LLD proactive granting. Proactive granting is tuned to a specific latency target by setting the size and cadence of grants for the latency service flow, so tighter latency targets require more bandwidth. Because the latency target must be met for the 99th percentile of packets, proactive granting has to be tuned to the extreme upper percentile of instantaneous throughput. As a result, in most cases the cable modem does not have enough data to fill the time slot it is given for transmission (the grant), and the unused portion is wasted bandwidth.
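The trade-off between grant size and unused bandwidth can be illustrated with a toy simulation. The sketch below is not the lab's methodology: the function name `simulate_proactive_grants`, the three-packet trace, and the grant sizes are all hypothetical, and the model simplifies DOCSIS by letting a packet be split across consecutive grants. It only shows that enlarging a fixed-cadence grant to shorten the worst-case wait also increases the number of grant bytes that go unused.

```python
from collections import deque

def simulate_proactive_grants(packets, grant_bytes, cadence_ms, duration_ms):
    """Drain a FIFO queue with fixed-size grants issued every cadence_ms.

    packets: list of (arrival_ms, size_bytes), sorted by arrival time.
    Returns (per-packet queuing latencies in ms, total unused grant bytes).
    Toy model: a packet may be split across consecutive grants.
    """
    queue = deque()          # entries are [arrival_ms, remaining_bytes]
    latencies, wasted = [], 0
    next_packet = 0
    for k in range(1, int(duration_ms // cadence_ms) + 1):
        grant_time = k * cadence_ms
        # Enqueue every packet that has arrived by this grant time.
        while next_packet < len(packets) and packets[next_packet][0] <= grant_time:
            arrival, size = packets[next_packet]
            queue.append([arrival, size])
            next_packet += 1
        budget = grant_bytes
        while queue and budget > 0:
            head = queue[0]
            sent = min(budget, head[1])
            head[1] -= sent
            budget -= sent
            if head[1] == 0:                 # last byte of the packet sent
                latencies.append(grant_time - head[0])
                queue.popleft()
        wasted += budget                     # unused part of this grant
    return latencies, wasted

# Hypothetical trace (arrival in ms, size in bytes): two small packets,
# then one larger burst.
trace = [(0.5, 200), (3.0, 200), (11.0, 800)]

for grant in (400, 800):
    lat, waste = simulate_proactive_grants(trace, grant, cadence_ms=5, duration_ms=20)
    print(f"grant={grant} B: worst latency {max(lat)} ms, wasted {waste} B")
```

In this made-up trace, doubling the grant from 400 to 800 bytes cuts the worst-case wait from 9.0 ms to 4.5 ms, but the unused grant bytes rise from 400 to 2,000.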

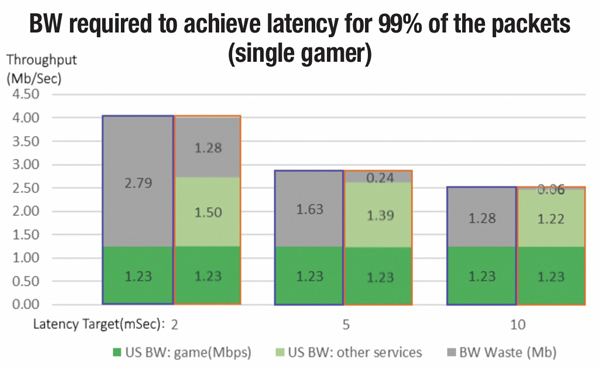

In the test, the two PCs played a peer-to-peer online VR game, The Unspoken, for over a minute. The test captured and analyzed 11,700 packets. Proactive granting was then tuned to meet three latency targets: 2 ms, 5 ms and 10 ms. For each target, the bandwidth waste was calculated. Figure 3 summarizes the results for the three latency targets. The results in the blue-outlined frames are discussed in this section, while those in the orange-outlined frames are discussed in the next section.

The average game upstream throughput is 1.23 Mbps. The results in Figure 3 indicate that for a tight 2 ms latency target, roughly 70% of the bandwidth, or 2.79 Mbps, is wasted, and at a 10 ms latency target, 50%, or 1.28 Mbps, is wasted.
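The percentages and the Mbps figures can be cross-checked with a few lines of arithmetic. The sketch below assumes, as an interpretation rather than something the article states, that the percentage is the wasted bandwidth divided by the total granted bandwidth, and that the granted bandwidth equals the average game throughput plus the waste. The helper name `waste_fraction` is illustrative.

```python
def waste_fraction(used_mbps: float, wasted_mbps: float) -> float:
    """Share of the granted bandwidth that goes unused.

    Assumption: granted bandwidth = used bandwidth + wasted bandwidth.
    """
    granted = used_mbps + wasted_mbps
    return wasted_mbps / granted

GAME_MBPS = 1.23  # average game upstream throughput quoted in the text

# (latency target in ms, wasted Mbps) pairs quoted in the text
for target_ms, wasted in [(2, 2.79), (10, 1.28)]:
    granted = GAME_MBPS + wasted
    share = waste_fraction(GAME_MBPS, wasted)
    print(f"{target_ms} ms target: granted {granted:.2f} Mbps, wasted {share:.0%}")
```

Under this assumption, the 2 ms target corresponds to about 4.02 Mbps granted with 69% unused, and the 10 ms target to about 2.51 Mbps granted with 51% unused, which is consistent with the rounded figures of roughly 70% and 50%.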

Bandwidth efficiency under proactive granting

As Figure 3 indicates, bandwidth waste may be significant. The current LLD standard does not allow DOCSIS grants allocated for low latency traffic to be reclaimed for other traffic. If this limitation is removed and DOCSIS grants can be shared across all traffic, a significant amount of the lost bandwidth can be reclaimed.

To validate this assumption, we added a security camera SD stream with an average throughput of 1.5 Mbps in the background and re-ran the game test. We also changed the cable modem logic so that the portion of each proactive grant exceeding the game rate can be reclaimed for transmission of the security camera video stream. The results are shown in Figure 3 in the orange-outlined frames. With grant sharing, the bandwidth loss for all three latency targets is significantly reduced, and at the 10 ms latency target it drops to 60 kbps, a negligible amount.
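The idea behind grant sharing can be sketched as simple per-grant accounting. The code below is a hypothetical illustration, not the modified cable modem logic used in the lab: the function name `unused_grant_bytes`, the byte counts, and the simplification that background traffic is carried only in leftover grant space (ignoring its own default service flow) are all assumptions.

```python
def unused_grant_bytes(grant_bytes, game_bytes, background_bytes, share):
    """Total grant bytes left unused over a sequence of proactive grants.

    game_bytes[i]: latency-sensitive bytes ready at grant i
                   (assumed not to exceed grant_bytes).
    background_bytes[i]: background bytes arriving before grant i.
    share: if True, leftover grant space carries queued background bytes.
    """
    backlog = 0   # background bytes waiting for a sharing opportunity
    unused = 0
    for game, background in zip(game_bytes, background_bytes):
        leftover = max(grant_bytes - game, 0)   # game traffic goes first
        backlog += background
        if share:
            reclaimed = min(leftover, backlog)  # fill the gap with background
            backlog -= reclaimed
            leftover -= reclaimed
        unused += leftover
    return unused

game = [400, 0, 800, 0]          # hypothetical bursty game bytes per grant
camera = [300, 300, 300, 300]    # hypothetical steady camera bytes per grant

print(unused_grant_bytes(800, game, camera, share=False))  # no sharing
print(unused_grant_bytes(800, game, camera, share=True))   # with sharing
```

With these made-up numbers, the unused bytes fall from 2,000 without sharing to 800 with sharing. How much is actually reclaimed depends on how much background traffic is queued when each grant arrives.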

Conclusions and next steps

With the latency lab test, we have demonstrated the effectiveness of both multi-service flow and proactive granting techniques in providing robust low latency over the DOCSIS network. We have demonstrated that without LLD, latency will likely be too high for online gaming.

We have shown that, while proactive granting is necessary for providing low latency for gaming, tight latency requirements result in bandwidth waste that might become significant. We then demonstrated how grant sharing can be used to mitigate that waste.

While not a part of LLD, grant sharing could be introduced into LLD if bandwidth loss becomes a concern in the field.

Although DOCSIS now has the mechanisms to provide low latency services, if devices in the home connect to the cable modem over Wi-Fi, end-to-end latency will often be too high. Therefore, we believe low latency over Wi-Fi should also be explored, including possible mitigations, to help all cable subscribers realize the full benefit of low latency DOCSIS.

Robert Ferreira,

General Manager, Connected Home Division,

Intel

Bob is a General Manager in Intel’s Connected Home Division where he is responsible for strategy, technology, product planning, standards, and ecosystem development. He also serves as president of the Prpl Foundation. Bob previously led Intel’s Cable Business Unit from its inception in 2008, and has more than 30 years of experience in the wireless and wireline communications industry.

Barak Hermesh,

Principal Engineer, Connected Home Division,

Intel

Barak is a Principal Engineer in Intel’s Connected Home Division where he is responsible for future network transformative technologies such as low latency communication, 5G backhauling and network virtualization. Barak has also been a long-time member of standards bodies for various cable communication technologies, and has more than 18 years of experience in the wireless and wireline communications industry.